2024

|

| Thoeni, Klaus; Hartmann, P; Berglund, Tomas; Servin, Martin: Edge protection along haul roads in mines and quarries: A rigorous study based on full-scale testing and numerical modelling. In: Journal of Rock Mechanics and Geotechnical Engineering, 2024, ISSN: 1674-7755. @article{Thoeni2024,

title = {Edge protection along haul roads in mines and quarries: A rigorous study based on full-scale testing and numerical modelling},

author = {Klaus Thoeni and P Hartmann and Tomas Berglund and Martin Servin},

doi = {10.1016/j.jrmge.2024.10.005},

issn = {1674-7755},

year = {2024},

date = {2024-10-11},

urldate = {2024-10-11},

journal = {Journal of Rock Mechanics and Geotechnical Engineering},

abstract = {Safety berms (also called safety bunds or windrows), widely employed in surface mining and quarry operations, are typically designed based on rules of thumb. Despite having been used by the industry for more than half a century and accidents happening regularly, their behaviour is still poorly understood. This paper challenges existing practices through a comprehensive investigation combining full-scale experiments and advanced numerical modelling. Focusing on a Volvo A45G articulated dump truck (ADT) and a CAT 773B rigid dump truck (RDT), collision scenarios under various approach conditions and different safety berm geometries and materials are rigorously examined. The calibrated numerical model is used to assess the energy absorption capacity of safety berms with different geometry and to predict a critical velocity for a specific scenario. Back analysis of an actual fatal accident indicated that an ADT adhering to the speed limit could not be stopped by the safety berm designed under current guidelines. The study highlights the importance of considering the entire geometry and the mass and volume of the material used to build the safety berm alongside the speed and approach conditions of the machinery. The findings of the study enable operators to set speed limits based on specific berm geometries or adapt safety berm designs to match speed constraints for specific machinery. This will reduce the risk of fatal accidents and improve haul road safety.},

keywords = {Algoryx, External},

pubstate = {published},

tppubtype = {article}

}

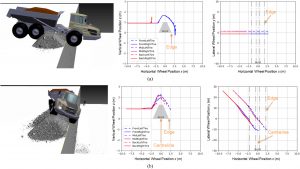

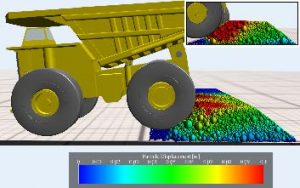

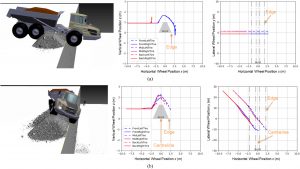

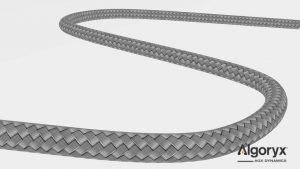

Safety berms (also called safety bunds or windrows), widely employed in surface mining and quarry operations, are typically designed based on rules of thumb. Despite having been used by the industry for more than half a century and accidents happening regularly, their behaviour is still poorly understood. This paper challenges existing practices through a comprehensive investigation combining full-scale experiments and advanced numerical modelling. Focusing on a Volvo A45G articulated dump truck (ADT) and a CAT 773B rigid dump truck (RDT), collision scenarios under various approach conditions and different safety berm geometries and materials are rigorously examined. The calibrated numerical model is used to assess the energy absorption capacity of safety berms with different geometry and to predict a critical velocity for a specific scenario. Back analysis of an actual fatal accident indicated that an ADT adhering to the speed limit could not be stopped by the safety berm designed under current guidelines. The study highlights the importance of considering the entire geometry and the mass and volume of the material used to build the safety berm alongside the speed and approach conditions of the machinery. The findings of the study enable operators to set speed limits based on specific berm geometries or adapt safety berm designs to match speed constraints for specific machinery. This will reduce the risk of fatal accidents and improve haul road safety. |
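The abstract ties a berm's energy absorption capacity to a critical approach velocity. As a back-of-the-envelope illustration only, assuming a plain energy balance in which the truck's translational kinetic energy ½mv² must not exceed an assumed absorption capacity, the critical speed is v = √(2E/m). The study itself derives its critical velocity from a calibrated numerical model; this sketch ignores approach angle, berm deformation and material behaviour, and the mass and capacity below are hypothetical placeholders, not values from the paper.

```python
import math


def kinetic_energy(mass_kg: float, speed_ms: float) -> float:
    """Translational kinetic energy E = 0.5 * m * v^2, in joules."""
    return 0.5 * mass_kg * speed_ms ** 2


def critical_speed(mass_kg: float, absorption_j: float) -> float:
    """Speed (m/s) at which kinetic energy equals an assumed berm absorption capacity."""
    if mass_kg <= 0 or absorption_j < 0:
        raise ValueError("mass must be positive and absorption capacity non-negative")
    return math.sqrt(2.0 * absorption_j / mass_kg)


def to_kmh(speed_ms: float) -> float:
    """Convert m/s to km/h."""
    return speed_ms * 3.6


# Hypothetical inputs for illustration only (not values from the paper).
truck_mass_kg = 70_000.0   # assumed loaded truck mass
berm_capacity_j = 1.0e6    # assumed energy the berm can absorb

v_c = critical_speed(truck_mass_kg, berm_capacity_j)
print(f"critical speed: {v_c:.2f} m/s ({to_kmh(v_c):.1f} km/h)")
```

With these placeholder numbers the script reports roughly 5.3 m/s (about 19 km/h). Because energy grows with v², doubling the approach speed quadruples the energy the berm would have to absorb.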

| Aoshima, Koji; Fälldin, Arvid; Wadbro, Eddie; Servin, Martin: World modeling for autonomous wheel loaders. In: Automation, vol. 5, iss. 3, pp. 259-281, 2024. @article{aoshima:2023:pmh,

title = {World modeling for autonomous wheel loaders},

author = {Koji Aoshima and Arvid Fälldin and Eddie Wadbro and Martin Servin},

url = {https://www.mdpi.com/2673-4052/5/3/16

https://www.mdpi.com/2673-4052/5/3/16/pdf

http://umit.cs.umu.se/wl-predictor/},

doi = {10.3390/automation5030016},

year = {2024},

date = {2024-07-07},

urldate = {2023-10-02},

journal = {Automation},

volume = {5},

issue = {3},

pages = {259-281},

abstract = {This paper presents a method for learning world models for wheel loaders performing automatic loading actions on a pile of soil. Data-driven models were learned to output the resulting pile state, loaded mass, time, and work for a single loading cycle given inputs that include a heightmap of the initial pile shape and action parameters for an automatic bucket-filling controller. Long-horizon planning of sequential loading in a dynamically changing environment is thus enabled as repeated model inference. The models, consisting of deep neural networks, were trained on data from a 3D multibody dynamics simulation of over 10,000 random loading actions in gravel piles of different shapes. The accuracy and inference time for predicting the loading performance and the resulting pile state were, on average, 95% in 1.2 ms and 97% in 4.5 ms, respectively. Long-horizon predictions were found feasible over 40 sequential loading actions.},

keywords = {Algoryx, External},

pubstate = {published},

tppubtype = {article}

}

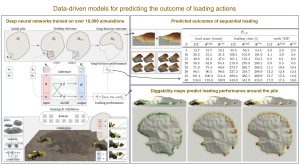

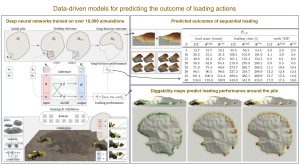

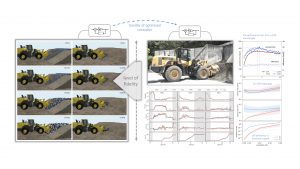

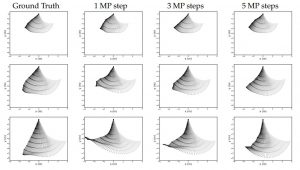

This paper presents a method for learning world models for wheel loaders performing automatic loading actions on a pile of soil. Data-driven models were learned to output the resulting pile state, loaded mass, time, and work for a single loading cycle given inputs that include a heightmap of the initial pile shape and action parameters for an automatic bucket-filling controller. Long-horizon planning of sequential loading in a dynamically changing environment is thus enabled as repeated model inference. The models, consisting of deep neural networks, were trained on data from a 3D multibody dynamics simulation of over 10,000 random loading actions in gravel piles of different shapes. The accuracy and inference time for predicting the loading performance and the resulting pile state were, on average, 95% in 1.2 ms and 97% in 4.5 ms, respectively. Long-horizon predictions were found feasible over 40 sequential loading actions. |
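The abstract states that long-horizon planning becomes repeated model inference: each predicted pile state is fed back as the input for the next loading cycle. A minimal sketch of that loop, assuming a greedy planner that maximises predicted loaded mass per unit time; `toy_model` is a hypothetical stand-in for the trained deep networks (it uses a 1D heightmap and a single action parameter), and the greedy criterion is an illustrative assumption rather than the paper's planner.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple


@dataclass
class Outcome:
    heightmap: List[float]  # predicted pile state after one loading cycle
    mass: float             # predicted loaded mass
    time: float             # predicted cycle time
    work: float             # predicted work


WorldModel = Callable[[List[float], float], Outcome]


def toy_model(heightmap: List[float], action: float) -> Outcome:
    """Hypothetical stand-in for a learned model: digs a fraction `action`
    of the material in the tallest heightmap cell."""
    i = max(range(len(heightmap)), key=lambda k: heightmap[k])
    removed = action * heightmap[i]
    new = list(heightmap)
    new[i] -= removed
    return Outcome(new, mass=removed, time=1.0 + action, work=2.0 * removed)


def plan_greedy(model: WorldModel, heightmap: Sequence[float],
                actions: Sequence[float], n_cycles: int
                ) -> Tuple[List[float], float, List[float]]:
    """Roll the model forward n_cycles times, picking at each step the action
    with the best predicted mass/time, and feeding the predicted state back in."""
    state = list(heightmap)
    plan: List[float] = []
    total_mass = 0.0
    for _ in range(n_cycles):
        best_a, best = None, None
        for a in actions:
            out = model(state, a)
            if best is None or out.mass / out.time > best.mass / best.time:
                best_a, best = a, out
        plan.append(best_a)
        total_mass += best.mass
        state = best.heightmap  # predicted pile state becomes the next input
    return plan, total_mass, state


plan, total, final_state = plan_greedy(toy_model, [1.0, 3.0, 2.0], [0.5, 1.0], 2)
print(plan, total, final_state)
```

The same loop works unchanged if `toy_model` is replaced by any callable with the same signature, which is the point of treating planning as repeated inference.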

| Aoshima, Koji; Servin, Martin: Examining the simulation-to-reality gap of a wheel loader digging in deformable terrain. In: Multibody System Dynamics, 2024. @article{aoshima2023sim2real,

title = {Examining the simulation-to-reality gap of a wheel loader digging in deformable terrain},

author = {Koji Aoshima and Martin Servin},

url = {https://doi.org/10.1007/s11044-024-10005-5

https://arxiv.org/abs/2310.05765

http://umit.cs.umu.se/wl-sim-to-real/},

doi = {10.1007/s11044-024-10005-5},

year = {2024},

date = {2024-06-30},

urldate = {2023-10-10},

journal = {Multibody System Dynamics},

abstract = {We investigate how well a physics-based simulator can replicate a real wheel loader performing bucket filling in a pile of soil. The comparison is made using field test time series of the vehicle motion and actuation forces, loaded mass, and total work. The vehicle was modeled as a rigid multibody system with frictional contacts, driveline, and linear actuators. For the soil, we tested discrete element models of different resolutions, with and without multiscale acceleration. The spatio-temporal resolution ranged from 50 to 400 mm and from 2 to 500 ms, and the computational speed ranged from 1/10,000 of real time to 5 times faster than real time. The simulation-to-reality gap was found to be around 10% and exhibited a weak dependence on the level of fidelity, e.g., compatible with real-time simulation. Furthermore, the sensitivity of an optimized force feedback controller under transfer between different simulation domains was investigated. The domain bias was observed to cause a performance reduction of 5% despite the domain gap being about 15%.},

keywords = {Algoryx, External},

pubstate = {published},

tppubtype = {article}

}

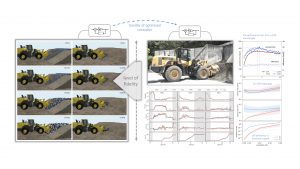

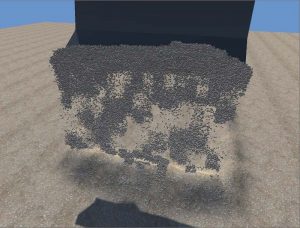

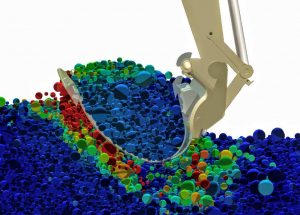

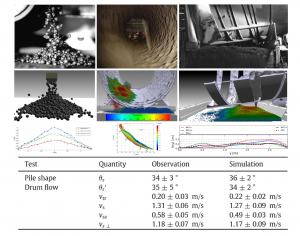

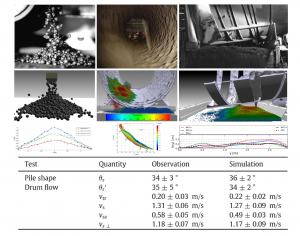

We investigate how well a physics-based simulator can replicate a real wheel loader performing bucket filling in a pile of soil. The comparison is made using field test time series of the vehicle motion and actuation forces, loaded mass, and total work. The vehicle was modeled as a rigid multibody system with frictional contacts, driveline, and linear actuators. For the soil, we tested discrete element models of different resolutions, with and without multiscale acceleration. The spatio-temporal resolution ranged from 50 to 400 mm and from 2 to 500 ms, and the computational speed ranged from 1/10,000 of real time to 5 times faster than real time. The simulation-to-reality gap was found to be around 10% and exhibited a weak dependence on the level of fidelity, e.g., compatible with real-time simulation. Furthermore, the sensitivity of an optimized force feedback controller under transfer between different simulation domains was investigated. The domain bias was observed to cause a performance reduction of 5% despite the domain gap being about 15%. |
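The abstract reports a simulation-to-reality gap of around 10% from comparing time series and scalar outcomes such as loaded mass and total work. The exact metric is not given here, so the sketch below assumes two common choices: the relative error of a scalar outcome, and a normalised RMS error between equally sampled time series. Both function names are illustrative.

```python
import math
from typing import Sequence


def relative_error(sim: float, real: float) -> float:
    """|sim - real| / |real| for a scalar outcome such as loaded mass or total work."""
    if real == 0:
        raise ValueError("reference value must be non-zero")
    return abs(sim - real) / abs(real)


def normalized_rmse(sim: Sequence[float], real: Sequence[float]) -> float:
    """RMS difference between two equally sampled series, divided by the RMS of the real series."""
    if len(sim) != len(real) or not real:
        raise ValueError("series must be non-empty and of equal length")
    n = len(real)
    num = math.sqrt(sum((s - r) ** 2 for s, r in zip(sim, real)) / n)
    den = math.sqrt(sum(r * r for r in real) / n)
    return num / den


print(relative_error(110.0, 100.0))                # a 10% gap in a scalar outcome
print(normalized_rmse([1.1, 2.2], [1.0, 2.0]))     # a 10% gap in a time series
```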

2022

|

| Marklund, Hannes: Expressibility of multiscale physics in deep networks. Department of Physics, Umeå University, 2022. @mastersthesis{marklund2022expressibility,

title = {Expressibility of multiscale physics in deep networks},

author = {Hannes Marklund},

url = {https://umu.diva-portal.org/smash/record.jsf?pid=diva2:1678353},

year = {2022},

date = {2022-06-10},

school = {Department of Physics, Umeå University},

abstract = {Motivated by the successes in the field of deep learning, the scientific community has been increasingly interested in neural networks that are able to reason about physics. As neural networks are universal approximators, they could in theory learn representations that are more efficient than traditional methods whenever improvements are theoretically possible. This thesis, done in collaboration with Algoryx, serves both as a review of the current research in this area and as an experimental investigation of a subset of the proposed methods. We focus on how useful these methods are as \textit{learnable simulators} of mechanical systems that are possibly constrained and multiscale. The experimental investigation considers low-dimensional problems with training data generated by either custom numerical integration or by use of the physics engine AGX Dynamics. A good learnable simulator should express some important properties such as being stable, accurate, generalizable, and fast. Importantly, a generalizable simulator must be able to represent reconfigurable environments, requiring a model known as a graph neural network (GNN). The experimental results show that black-box neural networks are limited to approximating physics in the states they have been trained on. The results also suggest that traditional message-passing GNNs have a limited ability to represent more challenging multiscale systems. This is currently the most widely used method to realize GNNs and thus raises concern as there is not much to be gained by investing time into a method with fundamental limitations. },

keywords = {Algoryx},

pubstate = {published},

tppubtype = {mastersthesis}

}

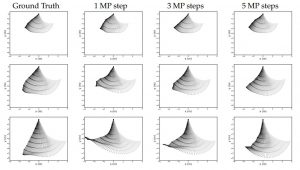

Motivated by the successes in the field of deep learning, the scientific community has been increasingly interested in neural networks that are able to reason about physics. As neural networks are universal approximators, they could in theory learn representations that are more efficient than traditional methods whenever improvements are theoretically possible. This thesis, done in collaboration with Algoryx, serves both as a review of the current research in this area and as an experimental investigation of a subset of the proposed methods. We focus on how useful these methods are as learnable simulators of mechanical systems that are possibly constrained and multiscale. The experimental investigation considers low-dimensional problems with training data generated by either custom numerical integration or by use of the physics engine AGX Dynamics. A good learnable simulator should express some important properties such as being stable, accurate, generalizable, and fast. Importantly, a generalizable simulator must be able to represent reconfigurable environments, requiring a model known as a graph neural network (GNN). The experimental results show that black-box neural networks are limited to approximating physics in the states they have been trained on. The results also suggest that traditional message-passing GNNs have a limited ability to represent more challenging multiscale systems. This is currently the most widely used method to realize GNNs and thus raises concern as there is not much to be gained by investing time into a method with fundamental limitations. |
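One intuition for why message-passing GNNs can struggle with multiscale systems (offered here as an illustration, not as the thesis's own argument) is locality: each step only exchanges information between graph neighbours, so after k steps a node is influenced only by nodes within k hops. The sketch below uses fixed linear weights (`self_w`, `msg_w`, both hypothetical) instead of the learned multilayer perceptrons a real GNN would use, and sum aggregation over an undirected chain.

```python
from typing import List, Sequence, Tuple


def message_passing_step(h: Sequence[float], edges: Sequence[Tuple[int, int]],
                         self_w: float = 1.0, msg_w: float = 0.5) -> List[float]:
    """One message-passing step on an undirected graph with scalar node features:
    each node sums its neighbours' features, then combines them with its own."""
    agg = [0.0] * len(h)
    for i, j in edges:
        agg[i] += h[j]  # message j -> i
        agg[j] += h[i]  # message i -> j
    return [self_w * h[k] + msg_w * agg[k] for k in range(len(h))]


# A 5-node chain with an impulse at node 0.
features = [1.0, 0.0, 0.0, 0.0, 0.0]
chain = [(0, 1), (1, 2), (2, 3), (3, 4)]
for step in range(2):
    features = message_passing_step(features, chain)
    print(step + 1, features)
# After two steps nodes 3 and 4 are still exactly zero: the impulse has
# travelled at most two hops, regardless of how the weights are chosen.
```

A long-range interaction, such as a force chain spanning a granular packing, would therefore need as many message-passing steps as the chain has hops.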

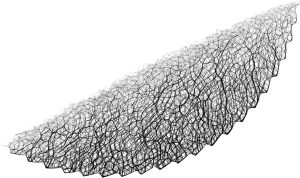

| Despaigne, Henrik: Fibre-based preconditioner for granular matter simulation. Department of Computing Science, Umeå University, 2022. @mastersthesis{Despaigne2022,

title = {Fibre-based preconditioner for granular matter simulation},

author = {Henrik Despaigne},

url = {https://www.diva-portal.org/smash/record.jsf?pid=diva2:1638655

https://www.diva-portal.org/smash/get/diva2:1638655/FULLTEXT01.pdf},

year = {2022},

date = {2022-02-18},

school = {Department of Computing Science, Umeå University},

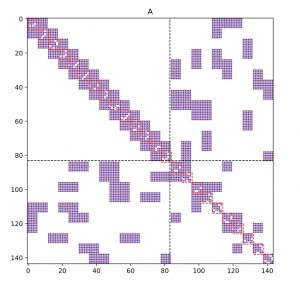

abstract = {Simulating granular media at large scales is hard to do because of ill-conditioning of the associated linear systems and the ineffectiveness of available iterative methods. One common way to improve iterative methods is to use a preconditioner which involves finding a good approximation of a linear system A. A good preconditioner will improve the condition number of A. If a linear system has a set of large eigenvalues of comparable magnitude, and the rest of the eigenvalues are small, so that the gap between the set of large eigenvalues and the small ones is large, the ill-conditioning caused by the small eigenvalues will not appear in the early iterations. We investigate a new fibre-based preconditioner that involves finding chains of contacting particles along the particles of a granular medium and reordering the system, which leads to a diagonal preconditioner. We show its effects on the relative residual and error of the velocity on linear systems where the ill-conditioning is caused by a big gap between a set of large eigenvalues and small eigenvalues for three different iterative methods: Uzawa, the Conjugate Residual (CR) and the Minimum Residual Method (MINRES).},

keywords = {Algoryx},

pubstate = {published},

tppubtype = {mastersthesis}

}

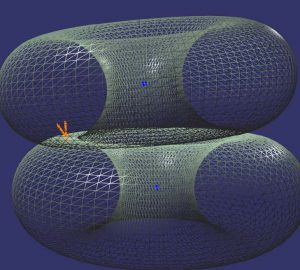

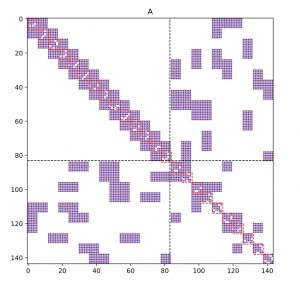

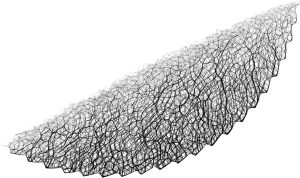

Simulating granular media at large scales is hard to do because of ill-conditioning of the associated linear systems and the ineffectiveness of available iterative methods. One common way to improve iterative methods is to use a preconditioner which involves finding a good approximation of a linear system A. A good preconditioner will improve the condition number of A. If a linear system has a set of large eigenvalues of comparable magnitude, and the rest of the eigenvalues are small, so that the gap between the set of large eigenvalues and the small ones is large, the ill-conditioning caused by the small eigenvalues will not appear in the early iterations. We investigate a new fibre-based preconditioner that involves finding chains of contacting particles along the particles of a granular medium and reordering the system, which leads to a diagonal preconditioner. We show its effects on the relative residual and error of the velocity on linear systems where the ill-conditioning is caused by a big gap between a set of large eigenvalues and small eigenvalues for three different iterative methods: Uzawa, the Conjugate Residual (CR) and the Minimum Residual Method (MINRES). |
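To make the link between a diagonal preconditioner and the condition number concrete, the sketch below applies plain symmetric Jacobi scaling, S A S with S = diag(1/√A_ii), to a badly scaled symmetric positive definite matrix. This is the textbook diagonal preconditioner, not the fibre-based construction from the thesis (whose chain-finding and reordering steps are not described in enough detail here to reproduce); the test matrix is a hypothetical example.

```python
import numpy as np


def jacobi_scale(A: np.ndarray) -> np.ndarray:
    """Symmetric diagonal (Jacobi) scaling S A S with S = diag(1 / sqrt(A_ii))."""
    s = 1.0 / np.sqrt(np.diag(A))
    return A * np.outer(s, s)  # elementwise A_ij * s_i * s_j equals (S A S)_ij


# A well-conditioned SPD matrix B, made badly scaled by D: A = D B D.
B = np.array([[2.0, -1.0, 0.0],
              [-1.0, 2.0, -1.0],
              [0.0, -1.0, 2.0]])
D = np.diag([1.0, 1e2, 1e4])
A = D @ B @ D

print("cond(A)     =", np.linalg.cond(A))
print("cond(S A S) =", np.linalg.cond(jacobi_scale(A)))
print("cond(B)     =", np.linalg.cond(B))
```

Here the scaling recovers B/2 exactly, so the condition number drops from roughly 10^8 to that of B. Diagonal scaling only removes ill-conditioning that comes from bad row and column scaling; ill-conditioning intrinsic to the contact network, as in large granular packings, needs more structure, which is what a fibre-based approach targets.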

| Wiberg, Viktor; Wallin, Erik; Servin, Martin; Nordfjell, Tomas: Control of rough terrain vehicles using deep reinforcement learning. In: IEEE Robotics and Automation Letters, vol. 7, no. 1, pp. 390-397, 2022. @article{wiberg2021b,

title = {Control of rough terrain vehicles using deep reinforcement learning},

author = {Viktor Wiberg and Erik Wallin and Martin Servin and Tomas Nordfjell},

url = {https://arxiv.org/abs/2107.01867

http://umit.cs.umu.se/control_terrain/

https://ieeexplore.ieee.org/document/9611016},

year = {2022},

date = {2022-01-01},

journal = {IEEE Robotics and Automation Letters},

volume = {7},

number = {1},

pages = {390-397},

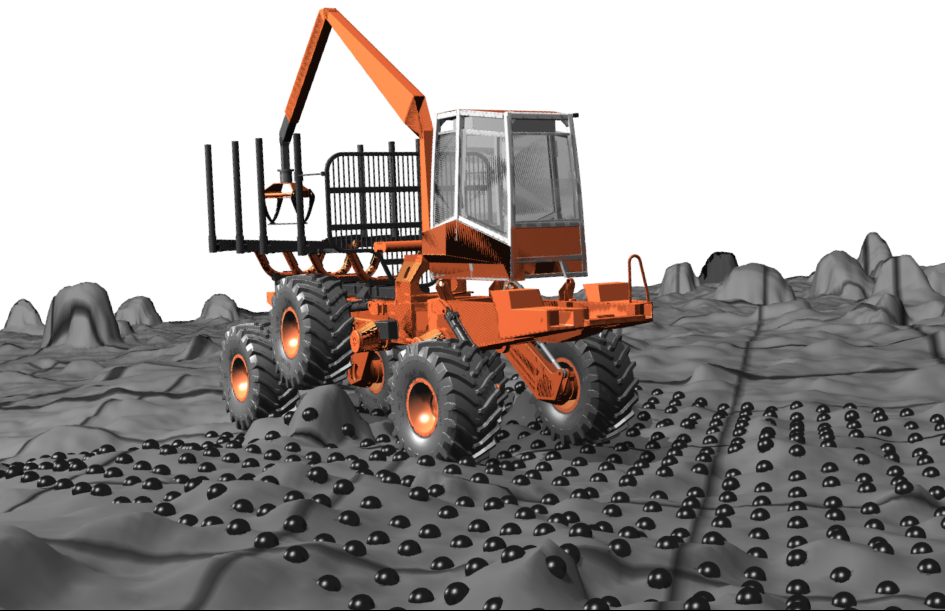

abstract = {We explore the potential to control terrain vehicles using deep reinforcement learning in scenarios where human operators and traditional control methods are inadequate. This letter presents a controller that perceives, plans, and successfully controls a 16-tonne forestry vehicle with two frame articulation joints, six wheels, and their actively articulated suspensions to traverse rough terrain. The carefully shaped reward signal promotes safe, environmental, and efficient driving, which leads to the emergence of unprecedented driving skills. We test learned skills in a virtual environment, including terrains reconstructed from high-density laser scans of forest sites. The controller displays the ability to handle obstructing obstacles, slopes up to 27°, and a variety of natural terrains, all with limited wheel slip, smooth, and upright traversal with intelligent use of the active suspensions. The results confirm that deep reinforcement learning has the potential to enhance control of vehicles with complex dynamics and high-dimensional observation data compared to human operators or traditional control methods, especially in rough terrain.

},

howpublished = { arXiv:2107.01867},

keywords = {Algoryx},

pubstate = {published},

tppubtype = {article}

}

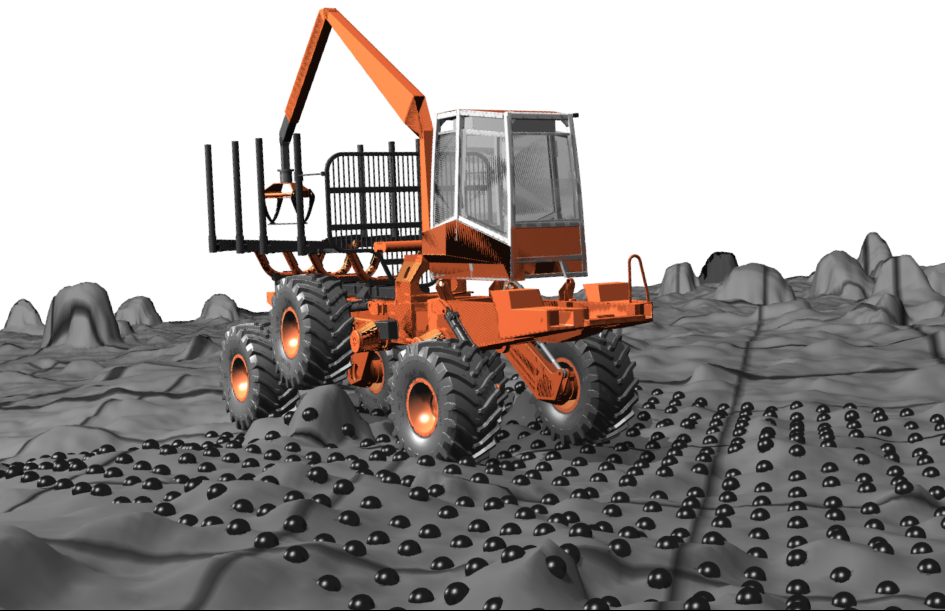

We explore the potential to control terrain vehicles using deep reinforcement learning in scenarios where human operators and traditional control methods are inadequate. This letter presents a controller that perceives, plans, and successfully controls a 16-tonne forestry vehicle with two frame articulation joints, six wheels, and their actively articulated suspensions to traverse rough terrain. The carefully shaped reward signal promotes safe, environmental, and efficient driving, which leads to the emergence of unprecedented driving skills. We test learned skills in a virtual environment, including terrains reconstructed from high-density laser scans of forest sites. The controller displays the ability to handle obstructing obstacles, slopes up to 27°, and a variety of natural terrains, all with limited wheel slip, smooth, and upright traversal with intelligent use of the active suspensions. The results confirm that deep reinforcement learning has the potential to enhance control of vehicles with complex dynamics and high-dimensional observation data compared to human operators or traditional control methods, especially in rough terrain.

|

2021

|

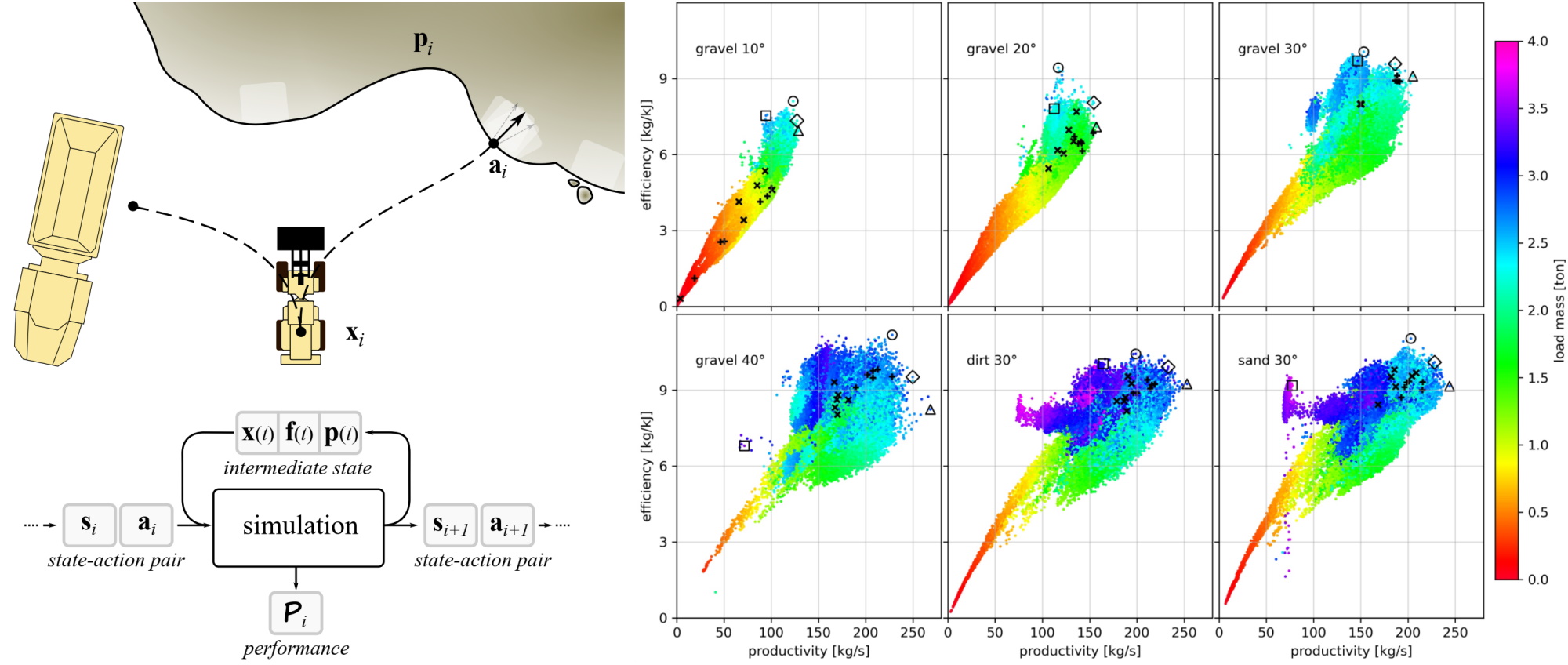

| Aoshima, Koji; Servin, Martin; Wadbro, Eddie: Simulation-Based Optimization of High-Performance Wheel Loading. Proceedings of the 38th International Symposium on Automation and Robotics in Construction (ISARC), International Association for Automation and Robotics in Construction (IAARC), 2021, ISBN: 978-952-69524-1-3. @conference{Aoshima2021,

title = {Simulation-Based Optimization of High-Performance Wheel Loading},

author = {Koji Aoshima and Martin Servin and Eddie Wadbro},

editor = {Feng, Chen and Linner, Thomas and Brilakis, Ioannis and Castro, Daniel and Chen, Po-Han and Cho, Yong and Du, Jing and Ergan, Semiha and Garcia de Soto, Borja and Gašparík, Jozef and Habbal, Firas and Hammad, Amin and Iturralde, Kepa and Bock, Thomas and Kwon, Soonwook and Lafhaj, Zoubeir and Li, Nan and Liang, Ci-Jyun and Mantha, Bharadwaj and Ng, Ming Shan and Hall, Daniel and Pan, Mi and Pan, Wei and Rahimian, Farzad and Raphael, Benny and Sattineni, Anoop and Schlette, Christian and Shabtai, Isaac and Shen, Xuesong and Tang, Pingbo and Teizer, Jochen and Turkan, Yelda and Valero, Enrique and Zhu, Zhenhua},

url = {https://www.iaarc.org/publications/2021_proceedings_of_the_38th_isarc/simulation_based_optimization_of_high_performance_wheel_loading.html

https://arxiv.org/abs/2107.14615

http://umit.cs.umu.se/hp_loading/},

doi = {10.22260/ISARC2021/0093},

isbn = {978-952-69524-1-3},

year = {2021},

date = {2021-08-02},

booktitle = {Proceedings of the 38th International Symposium on Automation and Robotics in Construction (ISARC)},

pages = {688-695},

publisher = {International Association for Automation and Robotics in Construction (IAARC)},

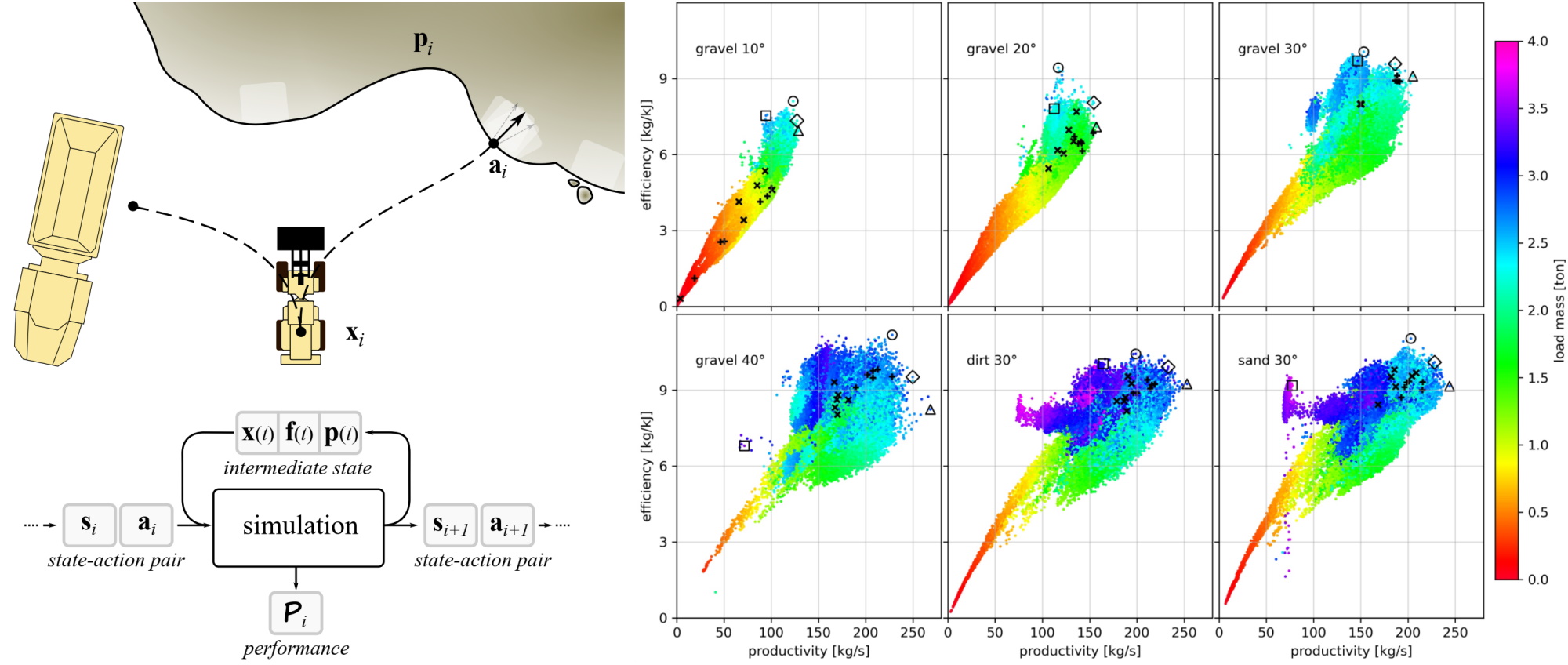

abstract = {Having smart and autonomous earthmoving in mind, we explore high-performance wheel loading in a simulated environment. This paper introduces a wheel loader simulator that combines contacting 3D multibody dynamics with a hybrid continuum-particle terrain model, supporting realistic digging forces and soil displacements at real-time performance. A total of 270,000 simulations are run with different loading actions, pile slopes, and soil to analyze how they affect the loading performance. The results suggest that the preferred digging actions should preserve and exploit a steep pile slope. High digging speed favors high productivity, while energy-efficient loading requires a lower dig speed.},

howpublished = {38th International Symposium on Automation and Robotics in Construction (ISARC), Dubai, UAE (2021). arXiv:2107.14615 },

keywords = {Algoryx, External},

pubstate = {published},

tppubtype = {conference}

}

Having smart and autonomous earthmoving in mind, we explore high-performance wheel loading in a simulated environment. This paper introduces a wheel loader simulator that combines contacting 3D multibody dynamics with a hybrid continuum-particle terrain model, supporting realistic digging forces and soil displacements at real-time performance. A total of 270,000 simulations are run with different loading actions, pile slopes, and soil to analyze how they affect the loading performance. The results suggest that the preferred digging actions should preserve and exploit a steep pile slope. High digging speed favors high productivity, while energy-efficient loading requires a lower dig speed. |

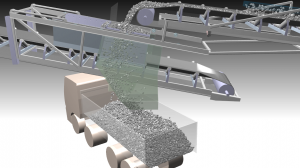

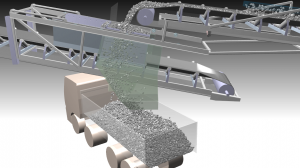

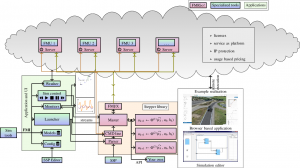

| Servin, Martin; Westerlund, Folke; Wallin, Erik: Digital twins with distributed particle simulation for mine-to-mill material tracking. In: Minerals, vol. 11, no. 5, pp. 524, 2021. @article{Servin2021b,

title = {Digital twins with distributed particle simulation for mine-to-mill material tracking},

author = {Martin Servin and Folke Westerlund and Erik Wallin},

url = {https://www.mdpi.com/2075-163X/11/5/524

https://arxiv.org/pdf/2104.09111.pdf},

doi = {10.3390/min11050524},

year = {2021},

date = {2021-04-19},

journal = {Minerals},

volume = {11},

number = {5},

pages = {524},

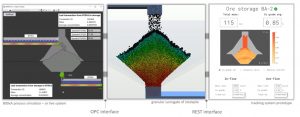

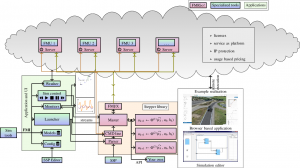

abstract = {Systems for transport and processing of granular media are challenging to analyse, operate and optimise. In the mining and mineral processing industries these systems are chains of processes with complex interplay between the equipment, control, and the processed material. The material properties have natural variations that are usually only known at certain locations. Therefore, we explore a material-oriented approach to digital twins with a particle representation of the granular media. In digital form, the material is treated as pseudo-particles, each representing a large collection of real particles of various sizes, shapes, and mineral properties. Movements and changes in the state of the material are determined by the combined data from control systems, sensors, vehicle telematics, and simulation models at locations where no real sensors can see. The particle-based representation enables material tracking along the chain of processes. Each digital particle can act as a carrier of observational data generated by the equipment as it interacts with the real material. This makes it possible to better learn material properties from process observations, and to predict the effect on downstream processes. We test the technique on a mining simulator and demonstrate analysis that can be performed using data from cross-system material tracking.

},

keywords = {Algoryx},

pubstate = {published},

tppubtype = {article}

}

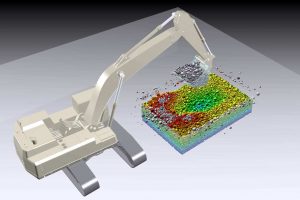

Systems for transport and processing of granular media are challenging to analyse, operate and optimise. In the mining and mineral processing industries these systems are chains of processes with complex interplay between the equipment, control, and the processed material. The material properties have natural variations that are usually only known at certain locations. Therefore, we explore a material-oriented approach to digital twins with a particle representation of the granular media. In digital form, the material is treated as pseudo-particles, each representing a large collection of real particles of various sizes, shapes, and mineral properties. Movements and changes in the state of the material are determined by the combined data from control systems, sensors, vehicle telematics, and simulation models at locations where no real sensors can see. The particle-based representation enables material tracking along the chain of processes. Each digital particle can act as a carrier of observational data generated by the equipment as it interacts with the real material. This makes it possible to better learn material properties from process observations, and to predict the effect on downstream processes. We test the technique on a mining simulator and demonstrate analysis that can be performed using data from cross-system material tracking.

|
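The abstract describes pseudo-particles that each stand for a large amount of real material and carry the observational data recorded as equipment handles it. A minimal data-structure sketch of that idea, assuming a simple location label per particle and a list of observation records; the class, the `move` transfer step, the station names, and the `ore_grade` field are all hypothetical and not taken from the paper's implementation.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class PseudoParticle:
    pid: int
    mass_t: float   # tonnes of real material this digital particle represents
    location: str   # current process step, e.g. "pile", "truck", "crusher"
    log: List[Dict[str, object]] = field(default_factory=list)

    def observe(self, station: str, **data: object) -> None:
        """Attach an observation made by the equipment at `station`."""
        self.log.append({"station": station, **data})


def move(particles: List[PseudoParticle], src: str, dst: str,
         station: str, **data: object) -> int:
    """Hypothetical transfer step: every particle at `src` moves to `dst` and
    records what the handling equipment observed. Returns the number moved."""
    moved = 0
    for p in particles:
        if p.location == src:
            p.location = dst
            p.observe(station, **data)
            moved += 1
    return moved


def mass_weighted_mean(particles: List[PseudoParticle], key: str) -> float:
    """Mass-weighted mean of an observed quantity over all particles that carry it."""
    pairs = [(p.mass_t, float(o[key])) for p in particles for o in p.log if key in o]
    total = sum(m for m, _ in pairs)
    return sum(m * v for m, v in pairs) / total if total else float("nan")


material = [PseudoParticle(pid=k, mass_t=10.0, location="pile") for k in range(3)]
move(material, "pile", "truck", station="loader", ore_grade=0.8)
move(material, "truck", "crusher", station="truck", payload_t=30.0)
print(material[0].log)
print(mass_weighted_mean(material, "ore_grade"))
```

Keeping the observations on the particle, rather than on the machine that made them, is what lets a downstream process such as the crusher query where its feed came from and what was measured along the way.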

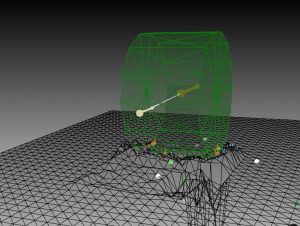

| Servin, Martin; Götz, Holger; Berglund, Tomas; Wallin, Erik: Towards a graph neural network solver for granular dynamics. VII International Conference on Particle-Based Methods (PARTICLES 2021), 2021. @conference{servintowards,

title = {Towards a graph neural network solver for granular dynamics},

author = {Martin Servin and Holger Götz and Tomas Berglund and Erik Wallin},

url = {https://www.researchgate.net/profile/Martin-Servin/publication/350089857_Towards_a_graph_neural_network_solver_for_granular_dynamics/links/6050749892851cd8ce445ca6/Towards-a-graph-neural-network-solver-for-granular-dynamics.pdf},

year = {2021},

date = {2021-03-01},

booktitle = {VII International Conference on Particle-Based Methods (PARTICLES 2021)},

abstract = {The discrete element method (DEM) is a versatile but computationally intensive method for granular dynamics simulation. We investigate the possibility of accelerating DEM simulations using graph neural networks (GNN), which automatically support variable connectivity between particles. This approach was recently found promising for particle-based simulation of complex fluids [1]. We start from a time-implicit, or nonsmooth, DEM [2], where the computational bottleneck is the process of solving a mixed linear complementarity problem (MLCP) to obtain the contact forces and particle velocity update. This solve step is substituted by a GNN, trained to predict the MLCP solution. Following [1], we employ an encoder-process-decoder structure for the GNN. The particle and connectivity data is encoded in an input graph with particle mass, external force, and previous velocity as node attributes, and contact overlap, normal, and tangent vectors as edge attributes. The sought solution is represented in the output graph with the updated particle velocities as node attributes and the contact forces as edge attributes. In the intermediate processing step, the input graph is converted to a latent graph, which is then advanced with a fixed number of message passing steps involving a multilayer perceptron neural network for updating the edge and node values. The output graph, with the approximate solution to the MLCP, is finally computed by decoding the last processed latent graph. Both a supervised and an unsupervised method are tested for training the network on granular simulation of particles in a rotating or static drum. AGX Dynamics [3] is used for running the simulations, and PyTorch [4] in combination with the Deep Graph Library [5] for the learning. The supervised model learns from ground truth MLCP solutions, computed using a projected Gauss-Seidel (PGS) solver, sampled from 1200 simulations involving 50-150 particles.
The unsupervised model learns to minimize a loss function derived from the MLCP residual function using particle configurations extracted from the same simulations but ignoring the approximate solution from the PGS solver. The simulation samples are split into training data (80%), validation data (10%), and test data (10%). Network hyperparameter optimization is performed. The supervised GNN solver reaches an error level of 1% for the contact forces and 0.01% on the particle velocities for a static drum. For a rotating drum, the respective errors are 10% and 1%. The unsupervised GNN solver reaches 1% velocity errors and 5% normal force errors, but it has significant problems with predicting the friction forces. The latter is presumably because of the discontinuous loss function that follows from the Coulomb friction law and therefore we explore regularization of it. Finally, we discuss the potential scalability and performance for large particle systems.},

keywords = {Algoryx},

pubstate = {published},

tppubtype = {conference}

}

The discrete element method (DEM) is a versatile but computationally intensive method for granular dynamics simulation. We investigate the possibility of accelerating DEM simulations using graph neural networks (GNN), which automatically support variable connectivity between particles. This approach was recently found promising for particle-based simulation of complex fluids [1]. We start from a time-implicit, or nonsmooth, DEM [2], where the computational bottleneck is the process of solving a mixed linear complementarity problem (MLCP) to obtain the contact forces and particle velocity update. This solve step is substituted by a GNN, trained to predict the MLCP solution. Following [1], we employ an encoder-process-decoder structure for the GNN. The particle and connectivity data is encoded in an input graph with particle mass, external force, and previous velocity as node attributes, and contact overlap, normal, and tangent vectors as edge attributes. The sought solution is represented in the output graph with the updated particle velocities as node attributes and the contact forces as edge attributes. In the intermediate processing step, the input graph is converted to a latent graph, which is then advanced with a fixed number of message passing steps involving a multilayer perceptron neural network for updating the edge and node values. The output graph, with the approximate solution to the MLCP, is finally computed by decoding the last processed latent graph. Both a supervised and unsupervised method are tested for training the network on granular simulation of particles in a rotating or static drum. AGX Dynamics [3] is used for running the simulations, and Pytorch [4] in combination with the Deep Graph Library [5] for the learning. The supervised model learns from ground truth MLCP solutions, computed using a projected Gauss-Seidel (PGS) solver, sampled from 1200 simulations involving 50-150 particles. 
The unsupervised model learns to minimize a loss function derived from the MLCP residual function, using particle configurations extracted from the same simulations but ignoring the approximate solution from the PGS solver. The simulation samples are split into training data (80%), validation data (10%), and test data (10%), and the network hyperparameters are optimized. The supervised GNN solver reaches an error level of 1% for the contact forces and 0.01% for the particle velocities in a static drum. For a rotating drum, the respective errors are 10% and 1%. The unsupervised GNN solver reaches 1% velocity error and 5% normal force error, but it has significant problems predicting the friction forces. The latter is presumably due to the discontinuous loss function that follows from the Coulomb friction law, and we therefore explore regularizing it. Finally, we discuss the potential scalability and performance for large particle systems. |
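The ground-truth solutions above come from a projected Gauss-Seidel (PGS) solver. As a rough illustration of what that solver does, here is a minimal sketch for a box-constrained MLCP `w = A z + b` with fixed bounds `lo <= z <= hi`. This is an assumption-laden simplification: in a nonsmooth DEM the friction bounds depend on the normal force (Coulomb law), and the function name and signature here are hypothetical, not the AGX Dynamics API.

```python
import math


def projected_gauss_seidel(A, b, lo, hi, iterations=100):
    """Approximately solve the box-constrained MLCP  w = A z + b,  lo <= z <= hi,
    where w_i >= 0 if z_i = lo_i, w_i <= 0 if z_i = hi_i, and w_i = 0 in between.
    Assumes A has a positive diagonal (e.g. a symmetric positive definite matrix).
    Fixed bounds are a simplification; friction bounds would normally track the normal force."""
    n = len(b)
    z = [0.0] * n
    for _ in range(iterations):
        for i in range(n):
            # Residual of row i using the most recent values of z (Gauss-Seidel sweep).
            r = b[i] + sum(A[i][j] * z[j] for j in range(n))
            # Local update that zeroes the row residual, projected onto [lo_i, hi_i].
            z[i] = min(hi[i], max(lo[i], z[i] - r / A[i][i]))
    return z


# Two coupled unilateral "contacts" (lower bound 0, no upper bound).
A = [[2.0, 1.0], [1.0, 2.0]]
z = projected_gauss_seidel(A, [1.0, -1.0], [0.0, 0.0], [math.inf, math.inf])
# z == [0.0, 0.5]: the first contact separates, the second carries the load.
```

A GNN solver in the spirit of the abstract would be trained to output `z` (contact forces) and the corresponding velocity update directly, skipping these sweeps.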

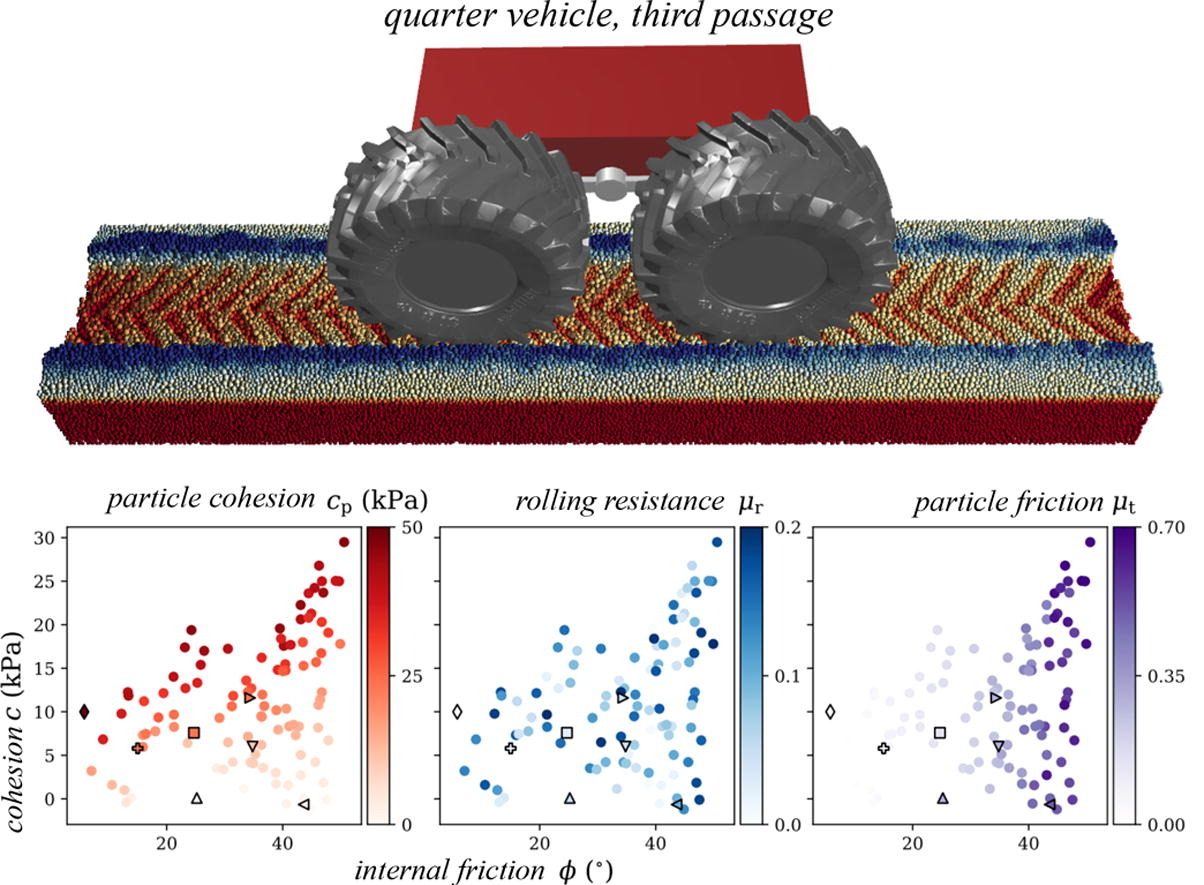

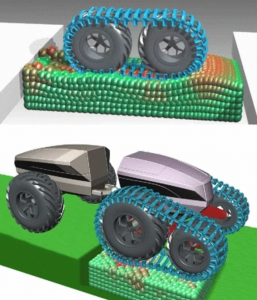

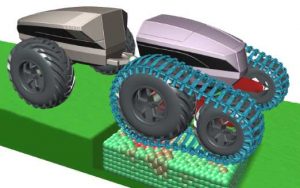

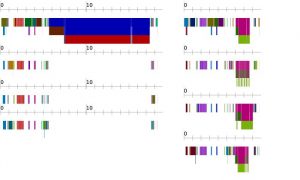

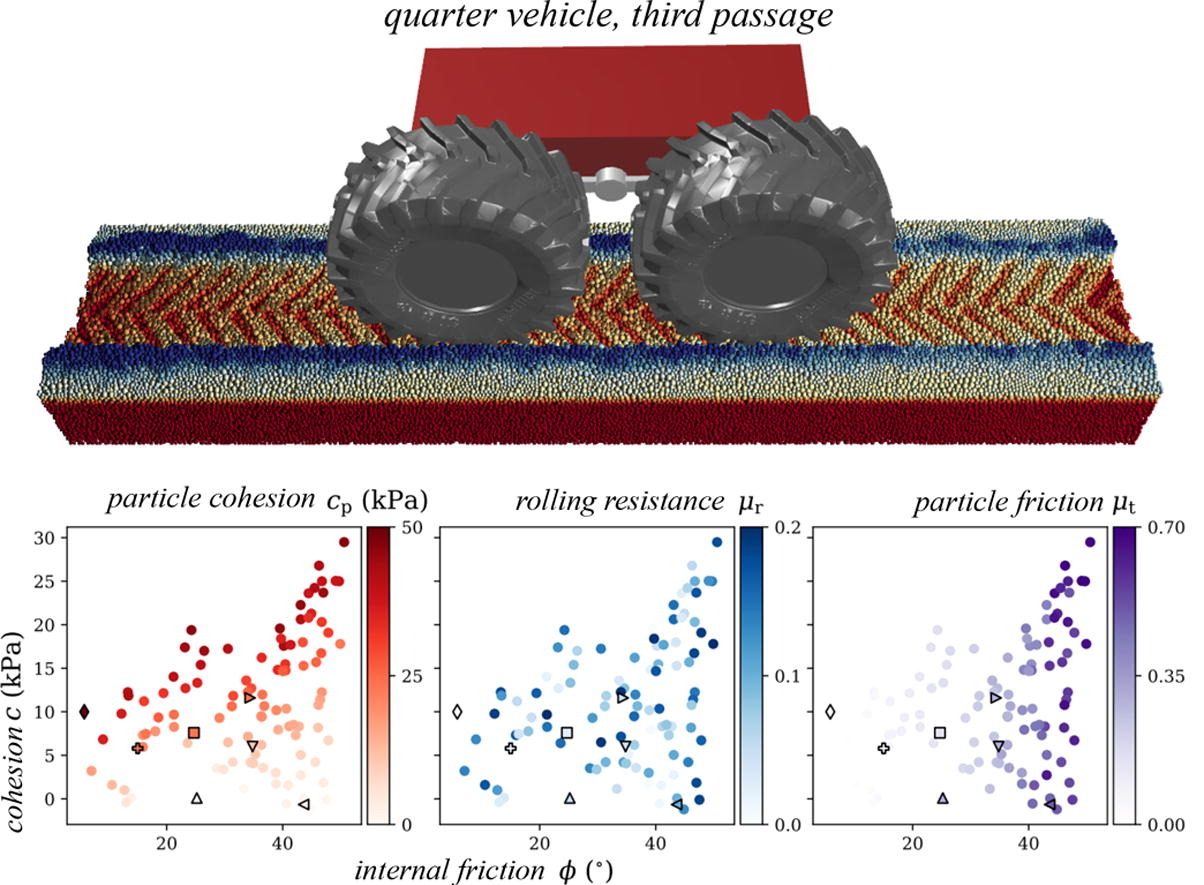

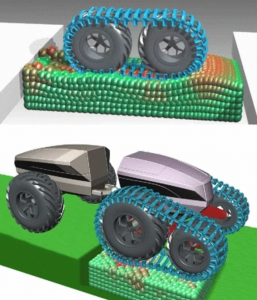

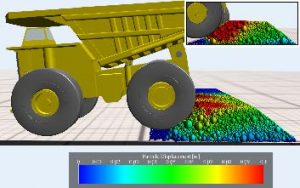

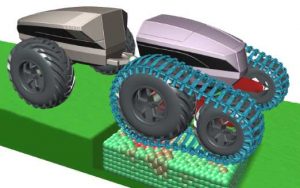

| Wiberg, Viktor; Servin, Martin; Nordfjell, Tomas: Discrete element modelling of large soil deformations under heavy vehicles. In: Journal of Terramechanics, vol. 93, pp. 11–21, 2021. @article{wiberg2021discrete,

title = {Discrete element modelling of large soil deformations under heavy vehicles},

author = {Viktor Wiberg and Martin Servin and Tomas Nordfjell},

url = {https://doi.org/10.1016/j.jterra.2020.10.002},

year = {2021},

date = {2021-01-01},

journal = {Journal of Terramechanics},

volume = {93},

pages = {11--21},

publisher = {Elsevier},

abstract = {This paper addresses the challenges of creating realistic models of soil for simulations of heavy vehicles on weak terrain. We modelled dense soils using the discrete element method with variable parameters for surface friction, normal cohesion, and rolling resistance. To find out what types of soil can be represented, we measured the internal friction and bulk cohesion of over 100 different virtual samples. To test the model, we simulated rut formation from a heavy vehicle with different loads and soil strengths. We conclude that the relevant space of dense frictional and frictional-cohesive soils can be represented and that the model is applicable for simulation of large deformations induced by heavy vehicles on weak terrain.},

keywords = {Algoryx},

pubstate = {published},

tppubtype = {article}

}

This paper addresses the challenges of creating realistic models of soil for simulations of heavy vehicles on weak terrain. We modelled dense soils using the discrete element method with variable parameters for surface friction, normal cohesion, and rolling resistance. To find out what types of soil can be represented, we measured the internal friction and bulk cohesion of over 100 different virtual samples. To test the model, we simulated rut formation from a heavy vehicle with different loads and soil strengths. We conclude that the relevant space of dense frictional and frictional-cohesive soils can be represented and that the model is applicable for simulation of large deformations induced by heavy vehicles on weak terrain. |
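The abstract does not say how internal friction and bulk cohesion were extracted from the virtual samples. One common approach, assumed here purely for illustration, is to fit the Mohr-Coulomb failure line `tau = c + sigma * tan(phi)` to peak shear strengths measured at several normal stresses. The sketch below does an ordinary least-squares fit; the function name and the synthetic data are hypothetical.

```python
import math


def fit_mohr_coulomb(normal_stress, shear_strength):
    """Least-squares fit of the Mohr-Coulomb line tau = c + sigma * tan(phi).

    Returns (phi in degrees, cohesion c in the same units as the stresses)."""
    n = len(normal_stress)
    mean_s = sum(normal_stress) / n
    mean_t = sum(shear_strength) / n
    sxx = sum((s - mean_s) ** 2 for s in normal_stress)
    sxy = sum((s - mean_s) * (t - mean_t) for s, t in zip(normal_stress, shear_strength))
    slope = sxy / sxx                    # tan(phi)
    cohesion = mean_t - slope * mean_s   # intercept at zero normal stress
    return math.degrees(math.atan(slope)), cohesion


# Synthetic peak shear strengths (kPa) at four normal stresses, generated
# from phi = 30 deg and c = 5 kPa only to illustrate the fit.
sigma = [10.0, 20.0, 40.0, 80.0]
tau = [5.0 + s * math.tan(math.radians(30.0)) for s in sigma]
phi, c = fit_mohr_coulomb(sigma, tau)
```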

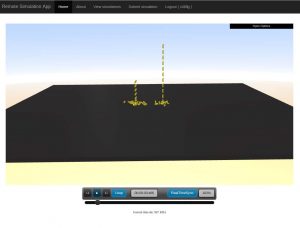

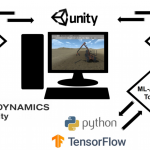

| Backman, Sofi; Lindmark, Daniel; Bodin, Kenneth; Servin, Martin; Mörk, Joakim; Löfgren, Håkan: Continuous control of an underground loader using deep reinforcement learning. In: Machines, vol. 9, no. 10, pp. 216, 2021. @article{backman2021continuous,

title = {Continuous control of an underground loader using deep reinforcement learning},

author = {Sofi Backman and Daniel Lindmark and Kenneth Bodin and Martin Servin and Joakim Mörk and Håkan Löfgren},

url = {https://www.mdpi.com/2075-1702/9/10/216

https://www.mdpi.com/2075-1702/9/10/216/pdf

https://www.algoryx.se/papers/drl-loader/

https://youtu.be/RzDTFZW26H0},

doi = {https://doi.org/10.3390/machines9100216},

year = {2021},

date = {2021-01-01},

journal = {Machines},

volume = {9},

number = {10},

pages = {216},

abstract = {Reinforcement learning control of an underground loader is investigated in a simulated environment, using a multi-agent deep neural network approach. At the start of each loading cycle, one agent selects the dig position from a depth camera image of the pile of fragmented rock. A second agent is responsible for continuous control of the vehicle, with the goal of filling the bucket at the selected loading point while avoiding collisions, getting stuck, or losing ground traction. It relies on motion and force sensors, as well as on camera and lidar. Using a soft actor-critic algorithm, the agents learn policies for efficient bucket filling over many subsequent loading cycles, with a clear ability to adapt to the changing environment. The best results, on average 75% of the maximum capacity, are obtained when including a penalty for energy usage in the reward.},

keywords = {Algoryx},

pubstate = {published},

tppubtype = {article}

}

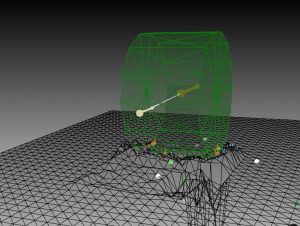

Reinforcement learning control of an underground loader is investigated in a simulated environment, using a multi-agent deep neural network approach. At the start of each loading cycle, one agent selects the dig position from a depth camera image of the pile of fragmented rock. A second agent is responsible for continuous control of the vehicle, with the goal of filling the bucket at the selected loading point while avoiding collisions, getting stuck, or losing ground traction. It relies on motion and force sensors, as well as on camera and lidar. Using a soft actor-critic algorithm, the agents learn policies for efficient bucket filling over many subsequent loading cycles, with a clear ability to adapt to the changing environment. The best results, on average 75% of the maximum capacity, are obtained when including a penalty for energy usage in the reward. |

| Andersson, Jennifer; Bodin, Kenneth; Lindmark, Daniel; Servin, Martin; Wallin, Erik: Reinforcement Learning Control of a Forestry Crane Manipulator. In: IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2021), Sep. 27-Oct. 1st, 2021, Prague, Czech Republic (2021). arXiv:2103.02315, 2021. @article{andersson2021reinforcement,

title = {Reinforcement Learning Control of a Forestry Crane Manipulator},

author = {Jennifer Andersson and Kenneth Bodin and Daniel Lindmark and Martin Servin and Erik Wallin},

url = {https://arxiv.org/abs/2103.02315

https://arxiv.org/pdf/2103.02315

https://www.algoryx.se/papers/rlc-crane/

https://youtu.be/7xwMlS5uqxs},

year = {2021},

date = {2021-01-01},

journal = {IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2021), Sep. 27-Oct. 1st, 2021, Prague, Czech Republic (2021). arXiv:2103.02315},

abstract = {Forestry machines are heavy vehicles performing complex manipulation tasks in unstructured production forest environments. Together with the complex dynamics of the on-board hydraulically actuated cranes, the rough forest terrains have posed a particular challenge in forestry automation. In this study, the feasibility of applying reinforcement learning control to forestry crane manipulators is investigated in a simulated environment. Our results show that it is possible to learn successful actuator-space control policies for energy-efficient log grasping by invoking a simple curriculum in a deep reinforcement learning setup. Given the pose of the selected logs, our best control policy reaches a grasping success rate of 97%. Including an energy-optimization goal in the reward function, the energy consumption is significantly reduced compared to control policies learned without incentive for energy optimization, while the increase in cycle time is marginal. The energy-optimization effects can be observed in the overall smoother motion and acceleration profiles during crane manipulation.},

keywords = {Algoryx},

pubstate = {published},

tppubtype = {article}

}

Forestry machines are heavy vehicles performing complex manipulation tasks in unstructured production forest environments. Together with the complex dynamics of the on-board hydraulically actuated cranes, the rough forest terrains have posed a particular challenge in forestry automation. In this study, the feasibility of applying reinforcement learning control to forestry crane manipulators is investigated in a simulated environment. Our results show that it is possible to learn successful actuator-space control policies for energy-efficient log grasping by invoking a simple curriculum in a deep reinforcement learning setup. Given the pose of the selected logs, our best control policy reaches a grasping success rate of 97%. Including an energy-optimization goal in the reward function, the energy consumption is significantly reduced compared to control policies learned without incentive for energy optimization, while the increase in cycle time is marginal. The energy-optimization effects can be observed in the overall smoother motion and acceleration profiles during crane manipulation. |

| Wallin, Erik; Servin, Martin: Data-driven model order reduction for granular media. In: Computational Particle Mechanics, pp. 1–14, 2021. @article{wallin2021data,

title = {Data-driven model order reduction for granular media},

author = {Erik Wallin and Martin Servin},

url = {http://umit.cs.umu.se/ddgranular/

https://doi.org/10.1007/s40571-020-00387-6

https://arxiv.org/pdf/2004.03349

https://arxiv.org/abs/2004.03349

https://youtu.be/YjwP9baTm-c?list=TLGGYuzbbp4IBtcxOTA0MjAyMQ},

year = {2021},

date = {2021-01-01},

journal = {Computational Particle Mechanics},

pages = {1--14},

publisher = {Springer},

abstract = {We investigate the use of reduced-order modelling to run discrete element simulations at higher speeds. Taking a data-driven approach, we run many offline simulations in advance and train a model to predict the velocity field from the mass distribution and system control signals. Rapid model inference of particle velocities replaces the computationally intensive process of computing contact forces and velocity updates. In coupled DEM and multibody system simulation, the predictor model can be trained to output the interfacial reaction forces as well. An adaptive model order reduction technique is investigated, decomposing the medium into domains of solid, liquid, and gaseous state. The model reduction is applied to solid and liquid domains, where the particle motion is strongly correlated with the mean flow, while resolved DEM is used for gaseous domains. Using a ridge regression predictor, the performance is tested on simulations of a pile discharge and bulldozing. The measured accuracy is about 90% and 65%, respectively, and the speed-up factor ranges between 10 and 60.},

keywords = {Algoryx},

pubstate = {published},

tppubtype = {article}

}

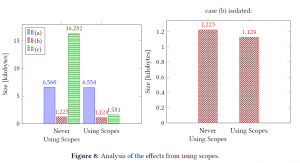

We investigate the use of reduced-order modelling to run discrete element simulations at higher speeds. Taking a data-driven approach, we run many offline simulations in advance and train a model to predict the velocity field from the mass distribution and system control signals. Rapid model inference of particle velocities replaces the computationally intensive process of computing contact forces and velocity updates. In coupled DEM and multibody system simulation, the predictor model can be trained to output the interfacial reaction forces as well. An adaptive model order reduction technique is investigated, decomposing the medium into domains of solid, liquid, and gaseous state. The model reduction is applied to solid and liquid domains, where the particle motion is strongly correlated with the mean flow, while resolved DEM is used for gaseous domains. Using a ridge regression predictor, the performance is tested on simulations of a pile discharge and bulldozing. The measured accuracy is about 90% and 65%, respectively, and the speed-up factor ranges between 10 and 60. |
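The abstract names a ridge regression predictor. A minimal sketch of ridge regression itself is shown below, solving the regularized normal equations `(X^T X + lam I) W = X^T Y`. How features are built is an assumption here: each row of `X` might hold a flattened mass distribution plus control signals, and each row of `Y` a flattened velocity field, but the paper's actual feature construction is not reproduced. All function names are hypothetical.

```python
def _solve(A, B):
    """Solve A X = B for X by Gauss-Jordan elimination with partial pivoting."""
    n = len(A)
    M = [list(A[i]) + list(B[i]) for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        p = M[col][col]
        M[col] = [v / p for v in M[col]]
        for r in range(n):
            if r != col:
                f = M[r][col]
                M[r] = [a - f * b for a, b in zip(M[r], M[col])]
    return [row[n:] for row in M]


def ridge_fit(X, Y, lam):
    """Weights W minimising ||X W - Y||^2 + lam * ||W||^2."""
    d, k = len(X[0]), len(Y[0])
    A = [[sum(row[i] * row[j] for row in X) + (lam if i == j else 0.0) for j in range(d)]
         for i in range(d)]
    B = [[sum(x[i] * y[c] for x, y in zip(X, Y)) for c in range(k)] for i in range(d)]
    return _solve(A, B)


def ridge_predict(W, X):
    """Map each feature row x to the predicted output row x W."""
    return [[sum(x[i] * W[i][c] for i in range(len(W))) for c in range(len(W[0]))]
            for x in X]


# Toy data where Y = X @ [1, 2]; with lam = 0 the fit recovers those weights.
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
Y = [[1.0], [2.0], [3.0]]
W = ridge_fit(X, Y, lam=0.0)
```

Increasing `lam` shrinks the weights, which trades some accuracy on the training snapshots for robustness when the number of features is large compared with the number of offline simulations.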

| Servin, Martin; Vesterlund, Folke; Wallin, Erik: Digital twins with embedded particle simulation. In: 14th World Congress in Computational Mechanics (WCCM) ECCOMAS Congress, Virtual, January 11-15, 2021, 2021. @inproceedings{servin2021digital,

title = {Digital twins with embedded particle simulation},

author = {Martin Servin and Folke Vesterlund and Erik Wallin},

url = {http://umu.diva-portal.org/smash/record.jsf?language=sv&pid=diva2%3A1499113&dswid=-3497

http://umu.diva-portal.org/smash/get/diva2:1499113/FULLTEXT01.pdf},

year = {2021},

date = {2021-01-01},

booktitle = {14th World Congress in Computational Mechanics (WCCM) ECCOMAS Congress, Virtual, January 11-15, 2021},

keywords = {Algoryx},

pubstate = {published},

tppubtype = {inproceedings}

}

|

2020

|

| Andersson, Jennifer: Simulation-Driven Machine Learning Control of a Forestry Crane Manipulator. 2020. @mastersthesis{andersson2020simulation,

title = {Simulation-Driven Machine Learning Control of a Forestry Crane Manipulator},

author = {Jennifer Andersson},

url = {http://uu.diva-portal.org/smash/record.jsf?pid=diva2%3A1507839&dswid=-3497

http://uu.diva-portal.org/smash/get/diva2:1507839/FULLTEXT01.pdf},

year = {2020},

date = {2020-01-01},

abstract = {A forwarder is a forestry vehicle carrying felled logs from the forest harvesting site, thereby constituting an essential part of the modern forest harvesting cycle. Successful automation efforts can increase productivity and improve operator working conditions, but despite increasing levels of automation in industry today, forwarders have remained manually operated. In our work, the grasping motion of a hydraulically actuated forestry crane manipulator is automated in a simulated environment using state-of-the-art deep reinforcement learning methods. Two approaches for single-log grasping are investigated: a multi-agent approach and a single-agent approach based on curriculum learning. We show that both approaches can yield a high grasping success rate. Given the position and orientation of the target log, the best control policy is able to successfully grasp 97.4% of target logs. Including an incentive for energy optimization, we are able to reduce the average energy consumption by 58.4% compared to the non-energy-optimized model, while maintaining 82.9% of the success rate. The energy-optimized control policy results in an overall smoother crane motion and acceleration profile during grasping. The results are promising and provide a natural starting point for end-to-end automation of forestry crane manipulators in the real world.},

keywords = {Algoryx},

pubstate = {published},

tppubtype = {mastersthesis}

}

A forwarder is a forestry vehicle carrying felled logs from the forest harvesting site, thereby constituting an essential part of the modern forest harvesting cycle. Successful automation efforts can increase productivity and improve operator working conditions, but despite increasing levels of automation in industry today, forwarders have remained manually operated. In our work, the grasping motion of a hydraulically actuated forestry crane manipulator is automated in a simulated environment using state-of-the-art deep reinforcement learning methods. Two approaches for single-log grasping are investigated: a multi-agent approach and a single-agent approach based on curriculum learning. We show that both approaches can yield a high grasping success rate. Given the position and orientation of the target log, the best control policy is able to successfully grasp 97.4% of target logs. Including an incentive for energy optimization, we are able to reduce the average energy consumption by 58.4% compared to the non-energy-optimized model, while maintaining 82.9% of the success rate. The energy-optimized control policy results in an overall smoother crane motion and acceleration profile during grasping. The results are promising and provide a natural starting point for end-to-end automation of forestry crane manipulators in the real world. |

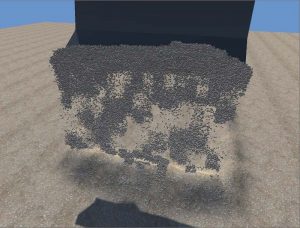

| Asplund, Philip: Real-Time Spherical Discretization. Department of Computing Science, Umeå University, Sweden, 2020. @mastersthesis{Asplund2020,

title = {Real-Time Spherical Discretization},

author = {Philip Asplund},

url = {https://www.algoryx.se/mainpage/wp-content/uploads/2021/04/REAL-TIME-SPHERICAL-DISCRETIZATION-Surface-rendering-and-upscaling-Philip-Asplund-Master_Thesis.pdf},

year = {2020},

date = {2020-01-01},

school = {Department of Computing Science, Umeå University, Sweden},

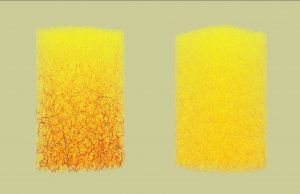

abstract = {This thesis explores a method for upscaling and increasing the visual fidelity of coarse soil simulation. This is done through the use of a High Resolution (HR)-based method that guides fine-scale particles, which are then rendered using either surface rendering or particle meshes. This thesis also explores the idea of omitting direct calculation of the internal and external forces and instead only using the velocity voxel grid generated from the coarse simulation. This is done to determine whether the method can still reproduce natural soil movements of the fine-scale particles when simulating and rendering under real-time constraints.

The result shows that this method increases the visual fidelity of the rendering without a significant impact on the overall simulation run-time performance, while the fine-scale particles still produce movements that are perceived as natural. It also shows that surface rendering requires a lower fine-scale particle resolution than rendering with particle meshes for the same perceived visual soil fidelity.},

keywords = {Algoryx},

pubstate = {published},

tppubtype = {mastersthesis}

}

This thesis explores a method for upscaling and increasing the visual fidelity of coarse soil simulation. This is done through the use of a High Resolution (HR)-based method that guides fine-scale particles, which are then rendered using either surface rendering or particle meshes. This thesis also explores the idea of omitting direct calculation of the internal and external forces and instead only using the velocity voxel grid generated from the coarse simulation. This is done to determine whether the method can still reproduce natural soil movements of the fine-scale particles when simulating and rendering under real-time constraints.

The result shows that this method increases the visual fidelity of the rendering without a significant impact on the overall simulation run-time performance, while the fine-scale particles still produce movements that are perceived as natural. It also shows that surface rendering requires a lower fine-scale particle resolution than rendering with particle meshes for the same perceived visual soil fidelity. |
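To make the "velocity grid only" idea concrete, the sketch below moves fine-scale particles by interpolating a coarse velocity grid instead of computing forces on them. Several assumptions are made for brevity and are not taken from the thesis: the grid is 2D rather than a 3D voxel grid, node `(i, j)` sits at `(i*h, j*h)`, interpolation is bilinear, and time integration is a single explicit Euler step. Function names are hypothetical.

```python
import math


def sample_velocity(grid, h, x, y):
    """Bilinearly interpolate a 2D velocity grid; grid[i][j] = (vx, vy) at (i*h, j*h).

    Samples outside the grid are clamped to the border nodes."""
    gx, gy = x / h, y / h
    i, j = math.floor(gx), math.floor(gy)
    tx, ty = gx - i, gy - j

    def node(a, b):
        a = min(max(a, 0), len(grid) - 1)
        b = min(max(b, 0), len(grid[0]) - 1)
        return grid[a][b]

    v00, v10 = node(i, j), node(i + 1, j)
    v01, v11 = node(i, j + 1), node(i + 1, j + 1)
    return tuple(
        (1 - tx) * (1 - ty) * v00[k] + tx * (1 - ty) * v10[k]
        + (1 - tx) * ty * v01[k] + tx * ty * v11[k]
        for k in range(2)
    )


def advect_fine_particles(particles, grid, h, dt):
    """Move fine-scale particles one explicit Euler step through the coarse velocity field."""
    moved = []
    for x, y in particles:
        vx, vy = sample_velocity(grid, h, x, y)
        moved.append((x + dt * vx, y + dt * vy))
    return moved


# A uniform coarse flow of 1 m/s along x carries a fine particle 0.1 m in 0.1 s.
uniform = [[(1.0, 0.0)] * 3 for _ in range(3)]
fine = advect_fine_particles([(0.5, 0.5)], uniform, h=1.0, dt=0.1)
```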

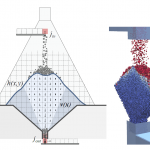

| Servin, Martin; Berglund, Tomas; Nystedt, Samuel: A multiscale model of terrain dynamics for real-time earthmoving simulation. In: arXiv preprint arXiv:2011.00459, 2020. @article{servin2020multiscale,

title = {A multiscale model of terrain dynamics for real-time earthmoving simulation},

author = {Martin Servin and Tomas Berglund and Samuel Nystedt},

url = {https://www.algoryx.se/papers/terrain/

https://arxiv.org/abs/2011.00459

https://arxiv.org/pdf/2011.00459.pdf

},

year = {2020},

date = {2020-01-01},

journal = {arXiv preprint arXiv:2011.00459},

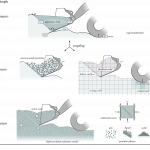

abstract = {A multiscale model for real-time simulation of terrain dynamics is explored. To represent the dynamics on different scales, the model combines the description of soil as a continuous solid, as distinct particles, and as rigid multibodies. The models are dynamically coupled to each other and to the earthmoving equipment. Agitated soil is represented by a hybrid of contacting particles and continuum solid, with the moving equipment and resting soil as geometric boundaries. Each zone of active soil is aggregated into distinct bodies, with the proper mass, momentum, and frictional-cohesive properties, which constrain the equipment's multibody dynamics. The particle model parameters are pre-calibrated to the bulk mechanical parameters for a wide range of different soils. The result is a computationally efficient model for earthmoving operations that resolves the motion of the soil, using a fast iterative solver, and provides realistic forces and dynamics for the equipment, using a direct solver for high numerical precision. Numerical simulations of excavation and bulldozing operations are performed to validate the model and measure the computational performance. Reference data is produced using coupled discrete element and multibody dynamics simulations at relatively high resolution. The digging resistance and soil displacements with the real-time multiscale model agree with the reference model to within 10-25%, and the model runs more than three orders of magnitude faster.},

keywords = {Algoryx},

pubstate = {published},

tppubtype = {article}

}

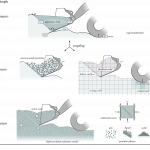

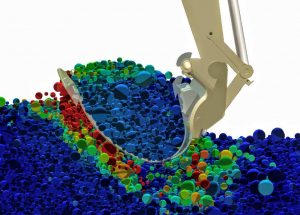

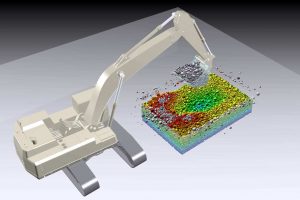

A multiscale model for real-time simulation of terrain dynamics is explored. To represent the dynamics on different scales, the model combines the description of soil as a continuous solid, as distinct particles, and as rigid multibodies. The models are dynamically coupled to each other and to the earthmoving equipment. Agitated soil is represented by a hybrid of contacting particles and continuum solid, with the moving equipment and resting soil as geometric boundaries. Each zone of active soil is aggregated into distinct bodies, with the proper mass, momentum, and frictional-cohesive properties, which constrain the equipment's multibody dynamics. The particle model parameters are pre-calibrated to the bulk mechanical parameters for a wide range of different soils. The result is a computationally efficient model for earthmoving operations that resolves the motion of the soil, using a fast iterative solver, and provides realistic forces and dynamics for the equipment, using a direct solver for high numerical precision. Numerical simulations of excavation and bulldozing operations are performed to validate the model and measure the computational performance. Reference data is produced using coupled discrete element and multibody dynamics simulations at relatively high resolution. The digging resistance and soil displacements with the real-time multiscale model agree with the reference model to within 10-25%, and the model runs more than three orders of magnitude faster. |

| Vikdahl, Martin: Streaming Data Models for Distributed Physics Simulation Workflows. Department of Computing Science, Umeå University, Sweden, 2020. @mastersthesis{Vikdahl2020,

title = {Streaming Data Models for Distributed Physics Simulation Workflows},

author = {Martin Vikdahl},

url = {http://urn.kb.se/resolve?urn=urn%3Anbn%3Ase%3Aumu%3Adiva-177599

https://www.algoryx.se/mainpage/wp-content/uploads/2021/04/Thesis-Martin.Vikdahl-Streaming.data_.models.for-distributed.physics.simulation.workflows.pdf},

year = {2020},

date = {2020-01-01},

school = {Department of Computing Science, Umeå University, Sweden},

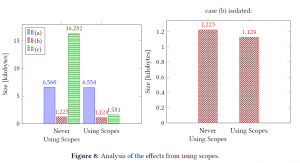

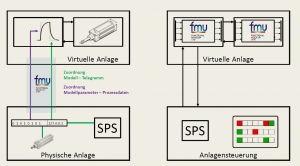

abstract = {This project explores the possibility of lowering the barrier to entry for integrating a physics engine into distributed, organization-specific pipelines by providing an interface for network communication between domain-specific tools. The approach uses an event-driven interface, both for transferring simulation models incrementally as event streams and for suggesting modifications of the models.

The proposed architecture uses a technique for storing and managing different versions of the simulation models that roughly aligns with the concept of event sourcing and allows updates to models to be communicated by sending only information about what has changed since the older version. The architecture also has a simple dependency management system between models that takes versioning into account by causally ordering dependencies. The solution allows multiple simultaneous client users, which could support connecting collaborative editing and visualization tools.

By implementing a prototype of the architecture, it was concluded that the format could encode models into a compact stream of small, autonomous event messages that could be used to replicate the original structure on the receiving end. However, it was difficult to make a good quantitative evaluation without access to a large collection of representative example models, because the size distributions depended on the usage.},

keywords = {Algoryx},

pubstate = {published},

tppubtype = {mastersthesis}

}

This project explores the possibility of lowering the barrier to entry for integrating a physics engine into distributed, organization-specific pipelines by providing an interface for network communication between domain-specific tools. The approach uses an event-driven interface, both for transferring simulation models incrementally as event streams and for suggesting modifications of the models.

The proposed architecture uses a technique for storing and managing different versions of the simulation models that roughly aligns with the concept of event sourcing and allows updates to models to be communicated by sending only information about what has changed since the older version. The architecture also has a simple dependency management system between models that takes versioning into account by causally ordering dependencies. The solution allows multiple simultaneous client users, which could support connecting collaborative editing and visualization tools.

By implementing a prototype of the architecture, it was concluded that the format could encode models into a compact stream of small, autonomous event messages that could be used to replicate the original structure on the receiving end. However, it was difficult to make a good quantitative evaluation without access to a large collection of representative example models, because the size distributions depended on the usage. |
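The event-sourcing idea described above (store a model as an append-only history, and send a receiver only the events it has not yet seen) can be sketched in a few lines. This is a generic illustration, not the thesis's prototype: the `set`/`delete` event vocabulary, the dotted attribute keys, and all class and function names are hypothetical, and dependency management and network transport are left out.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional


@dataclass(frozen=True)
class Event:
    version: int   # position in the model's append-only history
    op: str        # "set" or "delete" (illustrative vocabulary)
    key: str       # path of a model attribute, e.g. "body1.mass"
    value: Any = None


@dataclass
class ModelLog:
    events: List[Event] = field(default_factory=list)

    @property
    def version(self) -> int:
        return len(self.events)

    def record(self, op: str, key: str, value: Any = None) -> int:
        """Append a change and return the new model version."""
        self.events.append(Event(self.version + 1, op, key, value))
        return self.version

    def changes_since(self, version: int) -> List[Event]:
        """Only the events that a receiver at `version` is missing."""
        return [e for e in self.events if e.version > version]


def replay(events: List[Event], state: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    """Rebuild a replica, or bring an existing one up to date, by applying events in order."""
    state = dict(state or {})
    for e in events:
        if e.op == "set":
            state[e.key] = e.value
        elif e.op == "delete":
            state.pop(e.key, None)
    return state


log = ModelLog()
log.record("set", "body1.mass", 10.0)
log.record("set", "body2.mass", 5.0)
replica = replay(log.changes_since(0))   # receiver starts from scratch
seen = log.version                        # receiver is now at version 2
log.record("set", "body1.mass", 12.0)
log.record("delete", "body2.mass")
replica = replay(log.changes_since(seen), replica)  # ship only the two new events
```

Because every event carries its version, a receiver only needs to remember the last version it applied to request an incremental update.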

2019

|

| Servin, Martin; Wallin, Erik: Reduced order modeling for realtime simulation with granular materials. In: VI International Conference on Particle-Based Methods-Fundamentals and Applications-PARTICLES. Barcelona, Spain, October 28-30, 2019, 2019. @inproceedings{servin2019reduced,

title = {Reduced order modeling for realtime simulation with granular materials},

author = {Martin Servin and Erik Wallin},

url = {http://umu.diva-portal.org/smash/record.jsf?language=sv&pid=diva2%3A1319374&dswid=-61

http://umu.diva-portal.org/smash/get/diva2:1319374/SUMMARY01.pdf

},

year = {2019},

date = {2019-01-01},

booktitle = {VI International Conference on Particle-Based Methods-Fundamentals and Applications-PARTICLES. Barcelona, Spain, October 28-30, 2019},

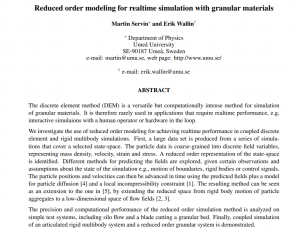

abstract = {The discrete element method (DEM) is a versatile but computationally intensive method for simulation of granular materials. It is therefore rarely used in applications that require real-time performance, e.g., interactive simulations with a human operator or hardware in the loop.

We investigate the use of reduced order modeling for achieving real-time performance in coupled discrete element and rigid multibody simulations. First, a large data set is produced from a series of simulations that cover a selected state space. The particle data is coarse-grained into discrete field variables representing mass density, velocity, strain, and stress. A reduced order representation of the state space is identified. Different methods for predicting the fields are explored, given certain observations and assumptions about the state of the simulation, e.g., the motion of boundaries, rigid bodies, or control signals. The particle positions and velocities can then be advanced in time using the predicted fields plus a model for particle diffusion [4] and a local incompressibility constraint [1]. The resulting method can be seen as an extension of the one in [5], extending the reduced space from rigid body motion of particle aggregates to a low-dimensional space of flow fields [2, 3].

The precision and computational performance of the reduced order simulation method is analyzed on simple test systems, including silo flow and a blade cutting a granular bed. Finally, coupled simulation of an articulated rigid multibody system and a reduced order granular system is demonstrated.},

keywords = {Algoryx},

pubstate = {published},

tppubtype = {inproceedings}

}

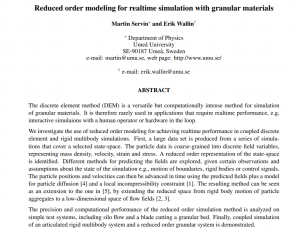

The discrete element method (DEM) is a versatile but computationally intensive method for simulation of granular materials. It is therefore rarely used in applications that require real-time performance, e.g., interactive simulations with a human operator or hardware in the loop.

We investigate the use of reduced order modeling for achieving real-time performance in coupled discrete element and rigid multibody simulations. First, a large data set is produced from a series of simulations that cover a selected state space. The particle data is coarse-grained into discrete field variables representing mass density, velocity, strain, and stress. A reduced order representation of the state space is identified. Different methods for predicting the fields are explored, given certain observations and assumptions about the state of the simulation, e.g., the motion of boundaries, rigid bodies, or control signals. The particle positions and velocities can then be advanced in time using the predicted fields plus a model for particle diffusion [4] and a local incompressibility constraint [1]. The resulting method can be seen as an extension of the one in [5], extending the reduced space from rigid body motion of particle aggregates to a low-dimensional space of flow fields [2, 3].

The precision and computational performance of the reduced order simulation method is analyzed on simple test systems, including silo flow and a blade cutting a granular bed. Finally, coupled simulation of an articulated rigid multibody system and a reduced order granular system is demonstrated. |
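The first step described above, coarse-graining particle data into discrete field variables, can be illustrated with simple cell binning. This sketch is a simplification under stated assumptions: it is 2D, assigns each particle to exactly one square cell (no smoothing kernel), and computes only mass density and mass-weighted velocity, leaving out the strain and stress fields mentioned in the abstract. The function name is hypothetical.

```python
import math
from collections import defaultdict


def coarse_grain(positions, velocities, masses, h):
    """Bin 2D particles into square cells of side h.

    Returns two dicts keyed by integer cell index (i, j): mass density
    (mass per cell area) and mass-weighted mean velocity."""
    mass = defaultdict(float)
    momentum = defaultdict(lambda: [0.0, 0.0])
    for (x, y), (vx, vy), m in zip(positions, velocities, masses):
        cell = (math.floor(x / h), math.floor(y / h))
        mass[cell] += m
        momentum[cell][0] += m * vx
        momentum[cell][1] += m * vy
    area = h * h
    density = {c: mass[c] / area for c in mass}
    velocity = {c: (momentum[c][0] / mass[c], momentum[c][1] / mass[c]) for c in mass}
    return density, velocity


density, velocity = coarse_grain(
    positions=[(0.1, 0.1), (0.4, 0.2), (1.5, 0.5)],
    velocities=[(1.0, 0.0), (3.0, 0.0), (0.0, 2.0)],
    masses=[1.0, 1.0, 2.0],
    h=1.0,
)
```

Using mass-weighted velocity rather than a plain average keeps the cell momentum consistent with the particles it was computed from, which matters when the fields are later used to advance particles again.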

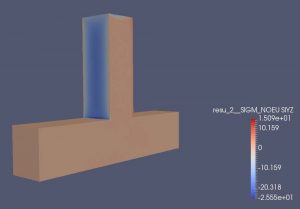

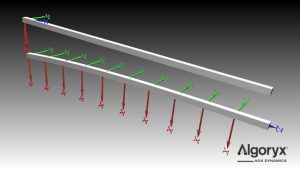

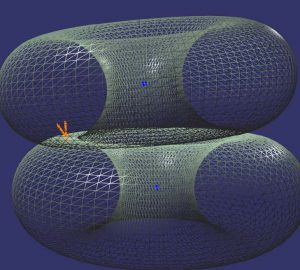

| Syrén, Ludvig: A method for introducing flexibility in rigid multibodies from reduced order elastic models. Department of Physics, Umeå University, 2019. @mastersthesis{Syren2019,

title = {A method for introducing flexibility in rigid multibodies from reduced order elastic models},

author = {Ludvig Syrén},

url = {http://urn.kb.se/resolve?urn=urn%3Anbn%3Ase%3Aumu%3Adiva-160417

https://umu.diva-portal.org/smash/get/diva2:1326569/FULLTEXT01.pdf

},

year = {2019},

date = {2019-01-01},

school = {Department of Physics, Umeå University},

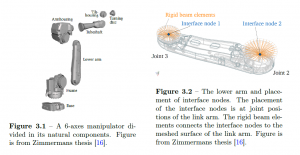

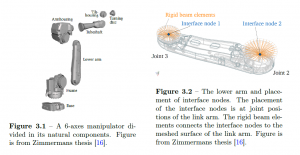

abstract = {In multibody dynamics simulation of robots and vehicles, it is common to model the systems as composed mainly of rigid bodies with articulation joints. With the trend toward more lightweight robots, however, the structural flexibility of the robot links needs to be considered for realistic dynamic simulations. The link geometries are complex, and finite element models (FEM) are required to compute the deformations. However, FEM includes too many degrees of freedom for time-efficient dynamics simulation. A popular approach is to generate reduced order models from the FE models, with far fewer degrees of freedom, for fast and precise simulations. In this thesis, a method for introducing reduced order models in rigid multibody systems was developed. The method divides a rigid body into two rigid bodies. Their relative movement is described by a six-degree-of-freedom restoration force, determined with a reduced order model from Guyan reduction (static condensation). The method was validated for quasistatic deformation of a homogeneous beam, a robot link arm with a more complex geometry, and in multibody dynamics simulations. Finally, the method was tested in simulation of a complete ABB robot with joint actuators, and significant differences in the motion of the robot tool centre point due to replacing a rigid link arm by a flexible one were demonstrated. The method shows good results for computing deformations of the homogeneous beam, of the link arm, and in the multibody simulation. The differences observed in simulation of a complete robot were expected and demonstrate that the method is applicable in robotic simulations.},

keywords = {Algoryx},

pubstate = {published},

tppubtype = {mastersthesis}

}

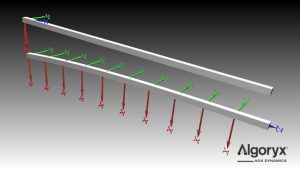

|
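The thesis builds its reduced order model with Guyan reduction (static condensation). The core condensation step can be sketched in Python with NumPy as below. This is a minimal illustration under stated assumptions, not the thesis implementation: the function names are hypothetical, and a 1D chain of springs stands in for a link's FE model, with the two end nodes loosely playing the role of the retained (master) degrees of freedom that connect the two rigid bodies.

```python
import numpy as np


def guyan_reduce(K, master):
    """Condense stiffness matrix K onto the master (retained) DOFs.

    K_red = K_mm - K_ms @ inv(K_ss) @ K_sm. Inertia and external loads on the
    condensed (slave) DOFs are neglected, as in static condensation.
    """
    K = np.asarray(K, dtype=float)
    master = list(master)
    slave = [i for i in range(K.shape[0]) if i not in master]
    K_mm = K[np.ix_(master, master)]
    K_ms = K[np.ix_(master, slave)]
    K_sm = K[np.ix_(slave, master)]
    K_ss = K[np.ix_(slave, slave)]
    # Solve K_ss X = K_sm instead of forming inv(K_ss) explicitly.
    return K_mm - K_ms @ np.linalg.solve(K_ss, K_sm)


def spring_chain_stiffness(n_springs, k=1.0):
    """Assemble the stiffness matrix of a 1D chain of identical springs (hypothetical test model)."""
    K = np.zeros((n_springs + 1, n_springs + 1))
    element = k * np.array([[1.0, -1.0], [-1.0, 1.0]])
    for e in range(n_springs):
        K[e:e + 2, e:e + 2] += element
    return K


if __name__ == "__main__":
    # Three unit springs in series; keep only the two end nodes (0 and 3).
    K_red = guyan_reduce(spring_chain_stiffness(3, k=1.0), master=[0, 3])
    # Expected: the series stiffness 1/3 between the end nodes,
    # i.e. [[1/3, -1/3], [-1/3, 1/3]].
    print(K_red)
```

Because the inertia of the condensed DOFs is neglected, the reduction is exact only for static loads applied at the retained DOFs.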

2018

|

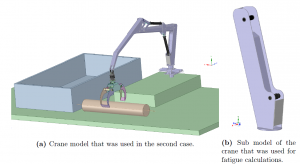

| Lundkvist, Anna: Fatigue analysis - local geometry optimization. 2018. @mastersthesis{Lundkvist2018,

title = {Fatigue analysis - local geometry optimization},

author = {Anna Lundkvist},

url = {http://www.diva-portal.org/smash/get/diva2:1251131/FULLTEXT01.pdf

http://urn.kb.se/resolve?urn=urn%3Anbn%3Ase%3Aumu%3Adiva-152084},

year = {2018},

date = {2018-07-09},

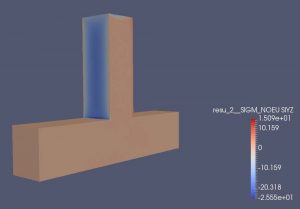

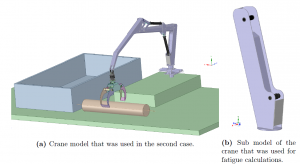

abstract = {The cause of fatigue failure is repeated loading, which causes cracks to appear and grow even when the loads are far below the static load that would make a structure fail. High-cycle fatigue, the focus of this project, is characterized by linear elastic stress, and failure occurs only after a large number of loading cycles. Although fatigue is the most common cause of failure in structures, it is not feasible to calculate fatigue damage analytically. The aim of this project was to develop, implement and test a workflow that unifies the wide range of physical scales and transient features relevant to fatigue analysis of complex dynamic machinery. The workflow should take both system-level and local aspects into account while keeping practical feasibility and simulation performance in mind. The resulting unified fatigue analysis method was then applied to several test cases and illustrated from a local geometry optimization perspective.

The workflow consists of the following steps. First, the model is simulated to obtain the load history. Then the finite element method (FEM) is used to create a submodel of the component to be analyzed. The submodel is subjected to forces and moments, and the stress is extracted from the areas of interest in the model, from which a linear relation between the applied loads and the stress is derived. The stress history is obtained by inserting the load history into this relation. Finally, the fatigue life is calculated using established fatigue analysis methods such as rainflow counting and the Palmgren-Miner rule.

This project focused only on the FEM submodel part of the fatigue workflow, so that the geometry of the submodel could be optimized for the longest fatigue life.

The workflow was tested on several test cases. The first was a simple upside-down T-shaped component made by welding its parts together. The next case was the same component without a weld, as if it had been moulded. The final case was a component from the base of a crane, on which two things were analyzed: the fatigue life around a hole into which a shaft was welded, and the optimal position for a hook to be welded onto the surface of the component.

},

keywords = {Algoryx},

pubstate = {published},

tppubtype = {mastersthesis}

}


|
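The workflow described in the abstract (load history, linear load-to-stress relation, rainflow counting, Palmgren-Miner rule) can be sketched in Python as below. This is a minimal illustration, not the thesis implementation: the function names, load channels and stress coefficients are hypothetical, the S-N curve is an assumed Basquin-type law N = C * S**(-m) with hypothetical constants C and m, and mean-stress effects are ignored. The rainflow routine follows the three-point counting scheme of ASTM E1049.

```python
from typing import List, Sequence, Tuple


def stress_history(load_history: Sequence[Sequence[float]],
                   coefficients: Sequence[float]) -> List[float]:
    """Map each load sample (forces/moments) to a stress value via a linear relation."""
    return [sum(c * f for c, f in zip(coefficients, loads)) for loads in load_history]


def turning_points(series: Sequence[float]) -> List[float]:
    """Reduce a signal to its alternating sequence of peaks and valleys."""
    pts: List[float] = []
    for x in series:
        if pts and x == pts[-1]:
            continue
        if len(pts) >= 2 and (pts[-1] - pts[-2]) * (x - pts[-1]) > 0:
            pts[-1] = x  # still moving in the same direction: extend the excursion
        else:
            pts.append(x)
    return pts


def rainflow(series: Sequence[float]) -> List[Tuple[float, float]]:
    """Three-point rainflow counting (ASTM E1049 style).

    Returns (stress_range, count) pairs, where count is 1.0 for a full cycle
    and 0.5 for a half cycle.
    """
    cycles: List[Tuple[float, float]] = []
    stack: List[float] = []
    for point in turning_points(series):
        stack.append(point)
        while len(stack) >= 3:
            x = abs(stack[-1] - stack[-2])  # most recent range
            y = abs(stack[-2] - stack[-3])  # range before it
            if x < y:
                break
            if len(stack) == 3:
                # Range y contains the starting point: count it as a half cycle.
                cycles.append((y, 0.5))
                stack.pop(0)
            else:
                # Range y is closed by range x: count a full cycle and remove it.
                cycles.append((y, 1.0))
                last = stack.pop()
                del stack[-2:]
                stack.append(last)
    # Ranges left in the residue are counted as half cycles.
    cycles.extend((abs(b - a), 0.5) for a, b in zip(stack, stack[1:]))
    return cycles


def miner_damage(cycles: Sequence[Tuple[float, float]], C: float, m: float) -> float:
    """Palmgren-Miner damage sum D = sum(n_i / N_i) with N_i = C * S_i**(-m)."""
    return sum(count * s ** m / C for s, count in cycles if s > 0)


if __name__ == "__main__":
    # Hypothetical load history with two channels (e.g. a force and a moment).
    loads = [(0.0, 0.0), (10.0, 1.0), (-5.0, 0.5), (20.0, 2.0), (0.0, 0.0)]
    coeffs = (2.0, 5.0)  # hypothetical stress per unit load from a FEM submodel
    sigma = stress_history(loads, coeffs)
    damage = miner_damage(rainflow(sigma), C=1e12, m=3.0)
    print("repetitions of the history to failure:", 1.0 / damage)
```

Keeping the load-to-stress step linear means the FEM submodel only has to be solved once per load channel; every later step works on the scalar stress history.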

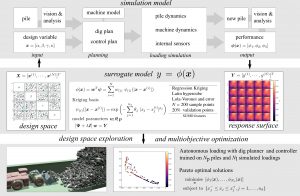

| Lindmark, Daniel M; Servin, Martin: Computational exploration of robotic rock loading. In: Robotics and Autonomous Systems, vol. 106, pp. 117–129, 2018. @article{lindmark2018computational,

title = {Computational exploration of robotic rock loading},

author = {Daniel M Lindmark and Martin Servin},

url = {http://umit.cs.umu.se/modsimcomplmech/docs/papers/computational_exploration.pdf

http://umit.cs.umu.se/loading/

https://vimeo.com/230986207},

doi = {10.1016/j.robot.2018.04.010},

year = {2018},

date = {2018-01-01},

journal = {Robotics and Autonomous Systems},

volume = {106},

pages = {117--129},

publisher = {North-Holland},

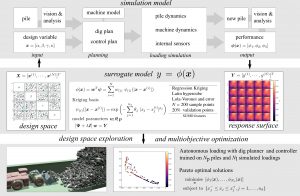

abstract = {A method for simulation-based development of robotic rock loading systems is described and tested. The idea is to first formulate a generic loading strategy as a function of the shape of the rock pile, the kinematics of the machine and a set of motion design variables that will be used by the autonomous control system. The relation between the loading strategy and resulting performance is then explored systematically using contacting multibody dynamics simulation, multiobjective optimisation and surrogate modelling. With the surrogate model it is possible to find Pareto optimal loading strategies for dig plans that are adapted to the current shape of the pile. The method is tested on a load–haul–dump machine loading from a large muck pile in an underground mine, with the loading performance measured by productivity, machine wear and rock debris spill that causes interruptions.},

keywords = {Algoryx},

pubstate = {published},

tppubtype = {article}

}

|
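The abstract describes finding Pareto optimal loading strategies over three performance measures: productivity, machine wear and rock debris spill. The final dominance filter over simulated candidates can be sketched in Python as below. It is only a minimal illustration: the paper's surrogate modelling and multiobjective optimisation are not reproduced, and the class name, field names, units and numbers are hypothetical.

```python
from typing import List, NamedTuple


class LoadingOutcome(NamedTuple):
    """Simulated performance of one candidate loading strategy (hypothetical fields)."""
    name: str
    productivity: float  # maximize, e.g. tonnes per hour
    wear: float          # minimize, e.g. a machine wear measure
    spill: float         # minimize, e.g. mass of rock debris spilled


def dominates(a: LoadingOutcome, b: LoadingOutcome) -> bool:
    """True if a is no worse than b in every objective and strictly better in at least one."""
    no_worse = a.productivity >= b.productivity and a.wear <= b.wear and a.spill <= b.spill
    strictly_better = a.productivity > b.productivity or a.wear < b.wear or a.spill < b.spill
    return no_worse and strictly_better


def pareto_front(outcomes: List[LoadingOutcome]) -> List[LoadingOutcome]:
    """Return the non-dominated outcomes (brute-force O(n^2) check)."""
    # An outcome never dominates itself, so comparing against the full list is safe.
    return [o for o in outcomes if not any(dominates(p, o) for p in outcomes)]


if __name__ == "__main__":
    candidates = [
        LoadingOutcome("A", 100.0, 5.0, 2.0),
        LoadingOutcome("B", 120.0, 6.0, 2.5),
        LoadingOutcome("C", 90.0, 6.0, 3.0),
        LoadingOutcome("D", 110.0, 4.0, 1.5),
    ]
    # A and C are dominated by D; B trades wear and spill for productivity.
    print([o.name for o in pareto_front(candidates)])  # ['B', 'D']
```

The brute-force check is adequate for the modest number of candidates a surrogate model would typically be queried for; larger sets would call for a sorting-based non-dominated filter.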

| Markgren, Hanna: Fatigue analysis - system parameters optimization. Department of Physics, Umeå University, 2018. @mastersthesis{Markgren2018,

title = {Fatigue analysis - system parameters optimization},

author = {Hanna Markgren},

url = {https://umu.diva-portal.org/smash/get/diva2:1247557/FULLTEXT01.pdf

http://urn.kb.se/resolve?urn=urn%3Anbn%3Ase%3Aumu%3Adiva-151755},

year = {2018},

date = {2018-01-01},

school = {Department of Physics, Umeå University},

abstract = {For a mechanical system exposed to repeated cyclic loads, fatigue is one of the most common causes of failure. However, methods for calculating fatigue failure are not well developed, and when fatigue calculations are made, they are often done with standard loads and simplified cases.

The fatigue life is the time from the start of use until the system fails due to fatigue, and some building blocks for calculating it already exist. The aim of this project was to combine these building blocks into a workflow that can be used to calculate the fatigue life.

The workflow was built to be easy to follow for any type of mechanical system. It starts from the load history of the system, which is converted into a stress history that is then used to calculate the fatigue life. Finally, the workflow was tested on two test cases to check whether it was usable.

In Algoryx Momentum, the model for each case was set up and the load history was extracted for each time step during the simulation. Converting the load history to a stress history requires FEM calculations; these were not part of this project, so the constants for converting loads to stress were given. With the stress history in place, it was possible to calculate the fatigue life.

Both test cases showed that it was possible to follow every step of the workflow and thereby calculate the fatigue life. The second test also showed that optimizing the system improved it and resulted in a longer lifetime.

In conclusion, the workflow seems to work as expected and is quite easy to follow, and it yields the fatigue life, which was the target of the project. However, to evaluate the workflow fully and understand how far the results can be trusted, a comparison with empirical data would be needed. Still, the tests indicate that the workflow gives reasonable results when calculating fatigue life.},

keywords = {Algoryx},

pubstate = {published},

tppubtype = {mastersthesis}

}

|
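As a worked illustration of why an optimization that lowers stress ranges lengthens fatigue life (the effect reported for the second test case), assume a Basquin-type S-N curve with hypothetical constants $C$ and $m$, combined with the Palmgren-Miner rule; neither the curve nor its constants are taken from the thesis. For cycle counts $n_i$ at stress ranges $S_i$, and fatigue life $L$ counted in repetitions of the load history:

$$
N_i = C\,S_i^{-m}, \qquad D = \sum_i \frac{n_i}{N_i} = \frac{1}{C}\sum_i n_i\,S_i^{m}, \qquad L = \frac{1}{D}.
$$

If a design change scales every stress range by the same factor $\lambda$, then

$$
D' = \frac{1}{C}\sum_i n_i\,(\lambda S_i)^{m} = \lambda^{m} D
\quad\Longrightarrow\quad
\frac{L'}{L} = \lambda^{-m}.
$$

For example, with an assumed slope $m = 3$, a uniform 10 % reduction in stress range ($\lambda = 0.9$) gives $L'/L = 0.9^{-3} = 1/0.729 \approx 1.37$, i.e. roughly 37 % longer life. Real design changes rarely scale all stress ranges uniformly, so this is only an order-of-magnitude guide.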

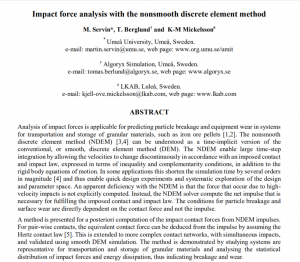

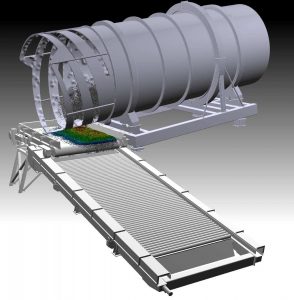

| Berglund, Tomas; Mickelsson, Kjell-Ove; Servin, Martin: Virtual commissioning of a mobile ore chute. The 9th International Conference on Conveying and Handling of Particulate Solids (CHoPS), London, UK (2018), vol. 10, 2018. @conference{berglund2018virtual,

title = {Virtual commissioning of a mobile ore chute},

author = {Tomas Berglund and Kjell-Ove Mickelsson and Martin Servin},

url = {http://umit.cs.umu.se/modsimcomplmech/docs/papers/vcmoc_chops.pdf

http://umit.cs.umu.se/chute/

https://www.linkedin.com/pulse/virtual-commissioning-mobile-ore-chute-martin-servin/

},

year = {2018},

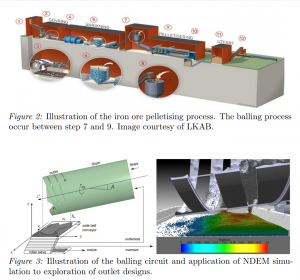

date = {2018-01-01},